In a paper by Pankil Shah, EnterpriseDB, which sells a paid version of PostgreSQL, tries to compare Microsoft SQL Server to PostgreSQL…

https://www.enterprisedb.com/blog/microsoft-sql-server-mssql-vs-postgresql-comparison-details-what-differences

This paper gives several pieces of false information…

That is why I decided to publish a correction.

Published 2021-04-03

Who am I?

I am currently 60 years old and have roamed the database field since the very beginning. In my country (France) I am considered an expert in RDBMS and in the SQL language. Since I started in the business, I have worked with the RDBMS Ingres (CA), DB2 (LUW), InterBase (Borland), Gupta SQL, Informix, Oracle, Sybase SQL Server, Sybase ASA (Watcom), RDB (Digital), MS SQL Server, PostgreSQL and MySQL…

After having worked for many IT companies and software vendors, I decided to found my own company in 2007, called SQL Spot, on the French Riviera. I travel in France, Europe and beyond to provide advice, assessments and audits for small and large enterprises that need help to choose, tune and take full advantage of their RDBMS… At the same time, I have taught courses in engineering schools as well as at university.

I am still active and as a man of experience, I know how to take sufficient distance to assess the risks and benefits of a particular system.

Some people think that I am acting against PostgreSQL… But as the saying goes, « who loves well, chastises well ». PostgreSQL must have its place in the sun, but its rightful place. Considering it equal to Oracle, IBM DB2 or SQL Server can prove catastrophic in certain cases, particularly when the preliminary technical and functional studies have not been seriously carried out.

Some PostgreSQL fundamentalists have succeeded in introducing their favorite DBMS without any prior study, proof of concept, benchmark or even simple test. This has often resulted in catastrophic adventures: a costly backtracking (the case of Agic Arco retirement insurance in France, for example); the abandonment of the solution after years of hardship (the case of CAF in France, for example, which recently returned to the bosom of Oracle, or Altavia, where a newly appointed CTO attempted to migrate from SQL Server to PostgreSQL without success…); or solutions that are expensive to operate, as is the case for the French website « Le Bon Coin », which uses more than 70 PostgreSQL servers and therefore nearly a hundred databases where all the others use only one server and one single database. Le Bon Coin wisely opted for SQL Server for the BI part of its analytical service (with one single server) over any solution based on PostgreSQL…

But it is clear that in some companies, a few PostgreSQL ayatollahs impose an inadequate solution, even if it costs more than it is supposed to bring, and do so on purpose, in order to keep a « private reserve » and become the king of data, to the detriment of users and of the quality of service that we are entitled to expect from good corporate IT!

It is therefore to avoid disappointments, and especially to let you decide in full knowledge of the facts, that I invite you to read this corrigendum, which details the differences between PostgreSQL and Microsoft SQL Server in order to guide your choice.

Finally, I want to say that I have no professional connection with Microsoft, I do not own any Microsoft stock and I have never sold any Microsoft product, so I do not profit from Microsoft in any way. I am simply a professional user of Microsoft products, a database expert specialized in SQL Server… Yes, I was an MS MVP (Most Valuable Professional) for many years, a status that recently ended, and in that capacity I was invited to Redmond (but I paid for my own plane ticket) to be informed early about what is new in Microsoft SQL Server, exactly as the PG community does with the PG Days, in which I have participated in the past.

What is the difference between PostgreSQL and SQL Server licensing? Comparison of PostgreSQL vs. MSSQL Server licensing model

The author gives the licensing price for only 2 versions of SQL Server (Standard and Enterprise).

But there are at least 4 different versions on premises and one in the cloud.

One version, SQL Server Express, is free but limited to 10 GB of relational data per database, with a maximum of 32760 databases. Non-relational data is unlimited (FILESTREAM, FileTable…).

Another version, called Web, which can be used only in SPLA mode, costs about $50 per month. Its limits are on hardware resources (max 4 sockets or 16 cores / 32 with hyperthreading, and 64 GB of RAM for classical relational tables + 16 GB for « in memory » tables, which PostgreSQL doesn’t have, + 16 GB for vertical indexing (columnstore), which PG doesn’t have).

EnterpriseDB sells a paid version of PostgreSQL that corrects some disadvantages of the free version, such as the lack of query hints…

Another important difference (omitted) is that SQL Server comes with a full stack of operational services, such as:

- another database engine for BI: cubes and data mining (called SSAS: SQL Server Analysis Services)

- an ETL named SSIS (SQL Server Integration Services; world record: 1 TB in 30 minutes)

- a reporting solution called SSRS (SQL Server Reporting Services)

- a self-service BI solution called Power BI (only available in the Enterprise edition)

With no need to pay extra for anything…

None of these solutions (ETL, reporting, BI, data mining…) is available with PostgreSQL. Some free solutions exist, but with limitations that make them unsuitable for enterprise use (for example, the free ETL Talend restricts some functionality, such as parallelism, which requires a paid version when you have a large amount of data). Of course, all those disparate solutions are less well integrated because they come from different software vendors, and this causes troubles that are unknown in the Microsoft world (integration has always been its strength)…

If you want Enterprise professional solutions, you have to pay EnterpriseDB or Fujitsu…

Which of PostgreSQL or SQL Server is easier to use? Compare the ease of use of PostgreSQL vs. MSSQL

For completeness, SQL Server originates from Sybase SQL Server (born 1983, from INGRES!), which then split into two branches: one for Sybase, renamed ASE (Adaptive Server Enterprise), and the other MS SQL Server. It is true that the first Microsoft version of SQL Server was released in 1989.

The author seems to say that SQL Server has no object-relational functionality… This is false. Objects can be coded in a .NET language (CLR) and integrated as datatypes of any database, and these objects can have methods to operate on them. All the SQL CLR objects created reside in the database, not outside… And all these objects can be indexed as long as they are « byte ordered ».

In addition, SQL Server has some NoSQL features that PostgreSQL does not have: graph tables, big tables (up to 30,000 columns), « in memory » tables (for key-value pair tables, but not only…), and document storage via FILESTREAM/FileTable with full-text and semantic searches.

None of these features is available in PostgreSQL.

SQL Server has an XML datatype in which XML data can be fully indexed. XML indexing does not exist in PostgreSQL!

What are the syntax differences between PostgreSQL and SQL Server? Compare PostgreSQL vs. MSSQL Server Syntax

The author shows, with some bad faith, old syntax inherited from Sybase to suggest that SQL Server syntax is abnormal and not portable, which is clearly false!

In truth SQL Server can do a

SELECT col1, col2

without brackets, and aliases can be created with the keyword AS (or without it). The old syntaxes presented by the author remain for compatibility reasons, but in my whole career (40 years of RDBMS use), I have not seen a customer use them for more than 20 years!

Also the below syntax given by the author:

SELECT AVG(col1)=avg1

does not exist in SQL Server and raises an error:

    Msg 102, Level 15, State 1, Line 2
    Incorrect syntax near '='.

This proves that the author does not even check his own writing… The correct, old-fashioned and unused syntax is:

SELECT avg1=AVG(col1) ...

Finally, when working with dates, you can use the standard ISO SQL function CURRENT_TIMESTAMP (without parentheses, as the standard requires).
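The portable forms discussed in this section can be sketched as follows. The table `T_SALES` and its columns are hypothetical placeholders chosen for illustration; they do not come from the original paper.

```sql
-- Hypothetical table, for illustration only
CREATE TABLE T_SALES (col1 INT, col2 DECIMAL(10, 2));

-- Plain column list: no brackets are required around identifiers
SELECT col1, col2
FROM   T_SALES;

-- Column aliases introduced with AS (the keyword itself is optional),
-- and the ISO function CURRENT_TIMESTAMP written without parentheses
SELECT col1              AS customer_id,
       AVG(col2)         AS avg1,
       CURRENT_TIMESTAMP AS computed_at
FROM   T_SALES
GROUP  BY col1;
```

Nothing in this sketch is specific to SQL Server, which is the point: the same statements should be accepted by any reasonably standard-compliant RDBMS.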

What are the data types differences between PostgreSQL and SQL Server? Compare data types in PostgreSQL vs. MSSQL

The author made some errors by giving corresponding datatypes that are no longer used…

In particular, the TEXT datatype has been obsolete since 2005 and must be replaced by VARCHAR(max) for ASCII-style encoding or NVARCHAR(max) for UNICODE-style encoding.
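As a minimal sketch of that replacement, assuming a hypothetical legacy table `T_NOTE` that still carries a TEXT column, the conversion can be done in place:

```sql
-- Hypothetical legacy table still using the deprecated TEXT type
CREATE TABLE T_NOTE
(NOTE_ID   INT PRIMARY KEY,
 NOTE_BODY TEXT);

-- In-place conversion to the current large-value type
-- (NVARCHAR(max) would be the target for Unicode data)
ALTER TABLE T_NOTE
   ALTER COLUMN NOTE_BODY VARCHAR(max);
```

On a large table this kind of change deserves a test run first, since its cost depends on the volume of data already stored.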

The DOUBLE PRECISION type that the author mentions does not exist in SQL Server under this name, but you need to use REAL, which is the strict equivalent.

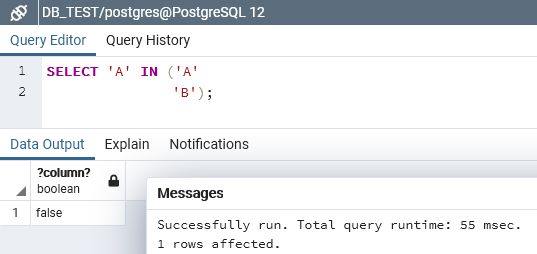

As for UUID or GUID, PostgreSQL does not have any corresponding datatype and stores it in a CHAR(16), which has some major disadvantages: it uses more space than required and does not provide all the features that such a datatype needs, like sorting or inequality comparison…

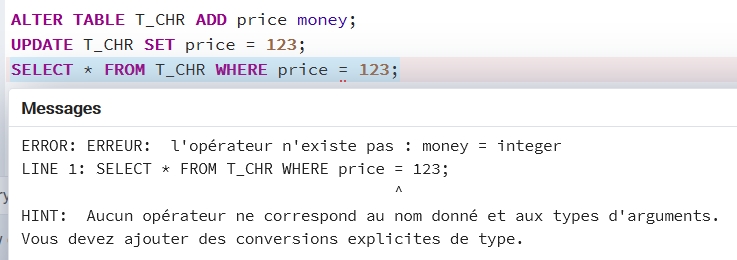

Worse, as we will discuss later, PostgreSQL implicit data casting results in some anomalies that can be dangerous…

Another significant difference between datatypes is that the bit type of SQL Server (which acts as a boolean) is really a bit, not a byte, and up to eight bit columns share the same byte. This means that boolean storage in SQL Server is eight times more compact than in PostgreSQL… and some features perform radically faster when using a bit datatype in SQL Server rather than a boolean in PostgreSQL.

What are the case sensitivity differences between PostgreSQL and SQL Server? Compare collations in PostgreSQL vs. MSSQL

Serious mistakes and misunderstandings appear in this paragraph.

First of all, MS SQL Server is neither case sensitive nor case insensitive by nature. The default sensitivity to case, accent, kanatype, width and some other features of string collations must be decided when installing an instance of SQL Server.

In SQL Server, the collation can be set at the instance level (called « cluster » in PG), at the database level when creating the database, at the column level when creating or altering a table or a view, and finally the COLLATE operator can be used in any string expression of any query, which PostgreSQL does not support.
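The levels listed above can be illustrated on the SQL Server side with a short sketch. The database name `DB_DEMO`, the table `T_PERSON` and the chosen collations are assumptions made for the example only.

```sql
-- Database level (hypothetical database name)
CREATE DATABASE DB_DEMO COLLATE French_CI_AI;

-- Column level: this column is case and accent sensitive,
-- whatever the default collation of its database is
CREATE TABLE T_PERSON
(PRS_ID   INT PRIMARY KEY,
 PRS_NAME VARCHAR(64) COLLATE French_CS_AS);

-- Expression level: COLLATE overrides the column collation
-- for this single comparison (case and accent insensitive)
SELECT PRS_ID, PRS_NAME
FROM   T_PERSON
WHERE  PRS_NAME = 'helene' COLLATE French_CI_AI;
```

With the expression-level override, the comparison is intended to match a stored value such as « Hélène » even though the column itself is declared sensitive.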

SQL Server’s collations support 68 different languages and can be CS or CI (case sensitivity), AS or AI (accent and diacritic sensitivity), width sensitive or not (WS), kanatype sensitive or not (KS) for Japanese, and sensitive to ideographic variation or not (VSS), or more simply binary (BIN or BIN2), with SC for supplementary characters like smileys and, lastly, UTF-8. PostgreSQL collations are limited to CI/CS and AI/AS.

PostgreSQL doesn’t have as many functionalities for collations and the COLLATE operator.

There are over 5,500 collations in SQL Server compared to the very poor number of collations in PostgreSQL. PostgreSQL recently added ICU collations, but these collations are severely bugged. As an example, the SQL operator « LIKE » with an ICU PG collation won’t execute the query and gives an astounding « non deterministic » collation error!

This is a real problem when you want to use PostGreSQL with Latin languages that have accents (Europe, South America, North Africa…. A lot of people!)

The problem is also that classical collation based on linux system can corrupt your indexes!

In short, with PostGreSQL the choice of collation is between plague and cholera …

What are the index types differences between PostgreSQL and SQL Server? Compare index types in PostgreSQL vs. MSSQL

First, there is no CLUSTERED index in PostgreSQL like SQL Server’s or the IOT of Oracle Database. Clustered indexes have some great advantages in terms of data storage (fewer bytes, because the clustered index leaf pages are stored in the table and not outside, i.e. no duplicate data) and also in terms of pure performance, especially in joins, because every nonclustered index carries the value of the clustered key (often the PK) and no longer requires a table access to do the « seek and join » part of a query…

The author forgets to say that PostgreSQL has recently added the INCLUDE clause to its traditional BTree indexes, as Microsoft SQL Server has offered for many years (since 2005), in order to ensure that the index covers the query.
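A covering index built with INCLUDE can be sketched as follows; the table `T_ORDER`, its columns and the index name are hypothetical.

```sql
-- Hypothetical order table
CREATE TABLE T_ORDER
(ORD_ID     INT            PRIMARY KEY,
 CUS_ID     INT            NOT NULL,
 ORD_DATE   DATE           NOT NULL,
 ORD_AMOUNT DECIMAL(12, 2) NOT NULL);

-- CUS_ID is the search key; ORD_DATE and ORD_AMOUNT are only
-- carried in the leaf level so the index "covers" the query below
CREATE INDEX X_ORD_CUS
   ON T_ORDER (CUS_ID)
   INCLUDE (ORD_DATE, ORD_AMOUNT);

-- Can be answered from the index alone, without visiting the table
SELECT ORD_DATE, ORD_AMOUNT
FROM   T_ORDER
WHERE  CUS_ID = 42;
```

The included columns are not part of the sort key, so they add no ordering cost and do not count against the key column limit.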

Yes, PG has the advantage of offering hash indexing… but PostgreSQL also recommended not using it! Hash indexes also exist in SQL Server but are dedicated to « in memory » tables.

GiST, GIN and BRIN index types have never been implemented other than in PostGreSQL …. Ask yourself the reason …!

SQL Server also has vertical indexes (columnstore indexes, since the 2012 version), which the author has omitted and which are intended for indexing very large tables. Such indexes are essential as soon as you reach hundreds of millions of rows, because data stored in such indexes is always compressed and this type of index can be read in « batch » mode: blocks of data rows are accessed in parallel, rather than in the traditional row-by-row mode that remains in classic indexes such as those PostgreSQL uses… Vertical indexes do exist in the Citus version of PostgreSQL (Citus has been acquired by Microsoft), but they are not as fast as SQL Server’s and are dedicated to OLAP, not to OLTP databases. Another solution is to switch to a paid and expensive version of PostgreSQL, such as the one sold by Fujitsu… Also, a SQL Server columnstore index can support 1,024 columns in the index key (traditional BTree indexes are limited to 32 columns in both SQL Server and PostgreSQL).
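As a minimal sketch, a columnstore index can be added on top of an ordinary rowstore table. The fact table `T_SALE_FACT` and the index name are hypothetical.

```sql
-- Hypothetical fact table stored in the classic row format
CREATE TABLE T_SALE_FACT
(SALE_ID   BIGINT         NOT NULL,
 STORE_ID  INT            NOT NULL,
 SALE_DATE DATE           NOT NULL,
 AMOUNT    DECIMAL(12, 2) NOT NULL);

-- Vertical (columnstore) index over the columns used for analysis
CREATE NONCLUSTERED COLUMNSTORE INDEX XV_SALE_FACT
   ON T_SALE_FACT (STORE_ID, SALE_DATE, AMOUNT);

-- Typical aggregate query that such an index is designed to serve
SELECT STORE_ID, SUM(AMOUNT) AS TOTAL_AMOUNT
FROM   T_SALE_FACT
GROUP  BY STORE_ID;
```

Whether the optimizer actually picks batch mode for a given query depends on the version, the edition and the data volume, so this should be checked on the execution plan.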

Vertical indexing does not exist in PostgreSQL.

For « in memory » tables, SQL Server offers hash indexes and has added a more sophisticated range index called BWTree, which PostgreSQL does not have because PostgreSQL does not support « in memory » tables.
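Both index kinds can be declared directly in the table definition, as in this sketch. The table `T_SESSION` and the bucket count are illustrative assumptions, and the database is assumed to already have a MEMORY_OPTIMIZED_DATA filegroup.

```sql
-- Assumption: the current database already contains a
-- MEMORY_OPTIMIZED_DATA filegroup (not shown here)
CREATE TABLE T_SESSION
(SES_ID        INT       NOT NULL
               PRIMARY KEY NONCLUSTERED HASH
               WITH (BUCKET_COUNT = 1000000),   -- hash index for point lookups
 SES_LAST_SEEN DATETIME2 NOT NULL,
 INDEX X_SES_LAST_SEEN
       NONCLUSTERED (SES_LAST_SEEN))            -- range index for ordered scans
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA);
```

The hash index suits equality searches on the key, while the nonclustered range index suits inequality and ORDER BY access on `SES_LAST_SEEN`.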

For XML, SQL Server provides 4 types of indexes (PRIMARY, FOR PATH, FOR VALUE, FOR PROPERTY) that cover full XML documents, and not only a single partial element that you have to duplicate in order to have an atomic index value, as is the case in PostgreSQL! In fact, PostgreSQL has no XML index at all…
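A short sketch of XML indexing in SQL Server follows. The table `T_DOCUMENT`, the index names and the XML path in the query are hypothetical.

```sql
-- An XML index requires a clustered primary key on the table
CREATE TABLE T_DOCUMENT
(DOC_ID  INT NOT NULL PRIMARY KEY,
 DOC_XML XML NOT NULL);

-- The primary XML index shreds the whole document
CREATE PRIMARY XML INDEX X_DOC_XML
   ON T_DOCUMENT (DOC_XML);

-- A secondary index, here specialized for path expressions
CREATE XML INDEX X_DOC_XML_PATH
   ON T_DOCUMENT (DOC_XML)
   USING XML INDEX X_DOC_XML FOR PATH;

-- Query that can benefit from the PATH index
SELECT DOC_ID
FROM   T_DOCUMENT
WHERE  DOC_XML.exist('/invoice/customer[@id="42"]') = 1;
```

The FOR VALUE and FOR PROPERTY variants are created the same way, by changing the last keyword.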

What are the replication differences between PostgreSQL and SQL Server? Compare replication in PostgreSQL vs. MSSQL

The author is completely wrong on this subject and confuses what belongs to high availability (which the paper does not mention for SQL Server) with what belongs to scalability (replication of some data, filtered by rows and columns, to other databases and instances of SQL Server).

For data replication (not used for high availability), SQL Server has 8 modes of communication:

- transactional replication (for read/write parts of tables from a database to many other databases, with no reverse)

- peer to peer replication, based on transactions (for read/write parts of tables from some databases to some other databases, with no reverse for the data replicated)

- merge replication (for read/write parts of tables from many databases to many other databases, with reverse)

- snapshot replication (for read/write tables from a database to many other databases, with no reverse)

- Oracle replication (special case)

- Service Broker (to communicate data from different databases with different table schemas, with reverse)

- the use of triggers and linked servers (which I do not recommend…)

- MS ActiveSync (which I do not recommend either…)

Data replication is a feature in which you choose the tables, and the rows and columns in those tables, that you want to replicate from one database to another on another instance. So, you can replicate a small part of some tables, and SQL Server also allows you to replicate the execution of stored procedures instead of row values…

Many big websites use transactional replication to increase the data surface in order to serve tens of thousands of simultaneous users. This must be combined with DPV (Distributed Partitioned Views, another feature that PostgreSQL doesn’t have). In France, CDiscount, Ventes Privées (aka veepee), FNAC, and many others among the top 10 merchant websites use this functionality on MS SQL Server clusters running in parallel…

For high availability (not used to replicate some data from some databases to others), SQL Server has 3 modes:

- Mirroring: per database, a fully synchronized (or not) copy of the database on another server (LAN or WAN) with the ability to fail over automatically (copies of databases are called « replicas » and can be neither read nor written). This feature has been deprecated in favor of the AlwaysOn technology, but is still suitable for small use cases.

- Log Shipping: per database, an asynchronous copy of the database on another server (LAN or WAN) without any built-in ability to fail over automatically. I must say that PostgreSQL can do that too, but in MS SQL Server wizards help the DBA to do it in less than a minute, with full coverage by automatic telemetry diagnostics!

- AlwaysOn: per group of databases (called « Availability Group » or AG), a fully synchronized (or not) copy of all the databases in the group on many other servers (LAN or WAN) with the ability to fail over automatically. Copies of databases are called « replicas » and can be set to a readable mode in order to serve SELECT queries or backups.

I must say that I have never seen any PostgreSQL high availability system with automatic failover capabilities that are totally transparent for applications, as Microsoft SQL Server has! And some PostgreSQL SQL statements will break the system (ALTER TABLE, DROP TABLE, TRUNCATE, DROP INDEX, CREATE TABLESPACE… for example).

Moreover, some commands that Microsoft SQL Server replicates, like creating a new tablespace (called a filegroup in SQL Server) with some files to store new tables or indexes, are strictly impossible in PostgreSQL streaming replication.

This generates a lot of replication conflicts, well explained in this paper:

Dealing with streaming replication conflicts in PostgreSQL

The MS SQL Server AlwaysOn technology, built around « availability groups », combines failover clustering with transactional streaming based on the binary result of the transaction (not on the logical replication of transactions, as PostgreSQL does), in synchronous or asynchronous mode, in order to:

- ensure Business Continuity automatically with some local nodes in synchronous mode and automatic failover;

- ensure Business Continuity manually with some local nodes in synchronous or asynchronous mode and manual failover;

- ensure Disaster Recovery with some local or distant nodes in asynchronous mode and manual failover;

- ensure extensibility of data access with some local or distant nodes in synchronous or asynchronous mode with databases in a readable setting.

This technology can be set up in a different way in the Linux version of SQL Server, and some nodes can reside in the Azure cloud.

AlwaysOn can currently have 8 nodes, with a maximum of 3 in synchronous mode with automatic failover; the others must be asynchronous with, of course, manual failover.
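To make the mix of synchronous and asynchronous nodes more concrete, here is a hedged sketch of an availability group declaration. The group name, database names, server names and endpoint URLs are all hypothetical, and the sketch assumes that the failover cluster, the mirroring endpoints and the full backups already exist.

```sql
-- Assumptions: the nodes belong to an existing failover cluster,
-- database mirroring endpoints listen on port 5022, and both
-- databases are in FULL recovery model with a full backup taken
CREATE AVAILABILITY GROUP AG_SALES
FOR DATABASE DB_ORDERS, DB_BILLING
REPLICA ON
   N'SQLNODE1' WITH
      (ENDPOINT_URL      = N'TCP://sqlnode1.example.com:5022',
       AVAILABILITY_MODE = SYNCHRONOUS_COMMIT,
       FAILOVER_MODE     = AUTOMATIC,
       SEEDING_MODE      = AUTOMATIC),
   N'SQLNODE2' WITH
      (ENDPOINT_URL      = N'TCP://sqlnode2.example.com:5022',
       AVAILABILITY_MODE = SYNCHRONOUS_COMMIT,
       FAILOVER_MODE     = AUTOMATIC,
       SEEDING_MODE      = AUTOMATIC,
       SECONDARY_ROLE (ALLOW_CONNECTIONS = READ_ONLY)),  -- readable replica
   N'SQLNODE3' WITH
      (ENDPOINT_URL      = N'TCP://sqlnode3.example.com:5022',
       AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,           -- distant node
       FAILOVER_MODE     = MANUAL,
       SEEDING_MODE      = AUTOMATIC);
```

In this sketch the two local nodes cover business continuity with automatic failover, while the third, asynchronous node plays the disaster recovery role with manual failover.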

The automatic synchronous failover of SQL Server via AlwaysOn guarantees that there is no loss of data of any kind, even if you add new storage to a database (called FILEGROUPs in SQL Server)… PostgreSQL streaming replication, even in synchronous mode, is not able to guarantee zero loss, and some SQL statements cannot be replicated on the slave nodes!

As an example, we built the AlwaysOn high availability for the worldwide transportation company Geodis, with more than 130 databases over 5 replicas (instances of SQL Server) and about 14 TB of data (2014).

I remember that in the past, in a PDF document, the PG DBA of « Le Bon Coin » was proud to have solved a major failure of the PostgreSQL high availability process in a few days… but forgot to mention that the main databases were running without any safety « net » during those same days…

PostgreSQL streaming replication also requires some modifications of the database in order to work, such as the presence of a primary key on all tables… whereas no prior modification is necessary to implement the Microsoft SQL Server AlwaysOn solution.

Other major differences between PostGreSQL streaming replication and SQL Server AlwaysOn are:

- Automatic seeding is used in the SQL Server AlwaysOn solution to automatically send the source database to the secondary instances in order to set up the corresponding replicas. No automation of this kind exists in PostgreSQL streaming replication, and this results in a waste of time, especially when large databases are involved.

- SQL Server AlwaysOn technology can use compression for many operations, like seeding or data replication with distant commit. There is no compression of any kind in PostgreSQL streaming replication… This results in heavy network traffic when the replication process operates on many databases.

- Encryption is on by default for SQL Server AlwaysOn high availability, but PostgreSQL streaming replication cannot encrypt the transmitted data… Of course, you can use SSL or a VPN… But in some circumstances an internal and specific encryption is preferable, especially when data is very sensitive, as in health care or finance…

- PostgreSQL can only replicate all the databases of a cluster. It is impossible in PostgreSQL to replicate only one database, or a subset of databases, when the source cluster has many databases… SQL Server can replicate not only one database of an instance that hosts many databases, but also a group of databases, called an « Availability Group », that need to fail over simultaneously… And finally, SQL Server AlwaysOn offers the possibility of dispatching the different groups of databases that fail over together to different targets.

- PostgreSQL can fail over only when the PostgreSQL engine crashes. There is no possibility for PostgreSQL to fail over automatically when a crash occurs at the physical level, the VM level or the database level… By contrast, MS SQL Server with Windows Server Failover Clustering (WSFC) detects troubles at any level: physical machine, VM, SQL instance or database…

Why is PostgreSQL replication so complicated and limited?

The technology used in PostgreSQL streaming replication is the equivalent of SQL Server transactional replication, which is not used for high availability due to many limitations. It works at the logical level, not the physical level. Replication at the logical level has some pitfalls. To keep it simple, the logical replication of a simple UPDATE that captures the current timestamp will not generate the same value on all the replicas, due to the asynchronous propagation of the logical SQL command… The same trouble appears when generating a UUID to be used as a key value, even in synchronous streaming replication.

Binary transactional replication, which copies bytes, always yields exact values at the same time and does not lock at all, because this process does not replicate a transaction but the consequences of the transaction… This subtle point makes all the difference!

This was one of the many reasons that Uber shifted from PostgreSQL to MySQL… And this is combined with the bad behaviour of MVCC in the replication process…

To avoid those problems, Microsoft SQL Server opted for physical (binary) replication, introduced with the 2005 version (mirroring), which copies parts of the transaction log stored as binary values of data, to be written later into the target table or index pages. This guarantees that the values are strictly the same across all the databases, whatever the timing of the replication, synchronous or asynchronous, and whatever the computed values, even when nondeterministic computations are run…

What are the differences in clustering between PostgreSQL and SQL Server? Compare clustering in PostgreSQL vs. MSSQL

Here again the author says false things… SQL Server has no active/active clustering capabilities.

In fact, it is possible to have several instances of SQL Server (the name « instance » corresponds to the term « cluster » in PG) on one machine, with some in active mode and others in passive mode (in that case, the SQL Server service of those instances is turned off). With a shared disk that is accessible by all the instances of SQL Server, the crash of one computer is failed over, manually or automatically, to another one that accesses the shared disk when restarting the SQL Server service instance… But this is an old-fashioned way of doing what we call high availability, and the most current way is to use the AlwaysOn technology, as written in the paragraph above.

Except through merge replication, there is no way to have a full database that is writable by many instances of SQL Server running on different machines. But with AlwaysOn it is possible to have one active database (read/write) and many other readable replicas.

What are the trigger differences between PostgreSQL and SQL Server? Compare the triggers in PostgreSQL vs. MSSQL

Both SQL Server and PostgreSQL support PER STATEMENT triggers. This is new in PG (2018), but old in SQL Server, dating back to about 1999… But the main difference is that SQL Server triggers can directly modify the data of the target table without any « contortion » of the code to avoid the mutating table error… PostgreSQL triggers are subject to uncontrolled recursion in this case. SQL Server controls recursion in two ways: at the database level (for recursion) and at the server level (for re-entrance, i.e. what I call « ping-pong » triggers).

    CREATE TRIGGER E_IU_CUSTOMER
    ON T_CUSTOMER_CMR
    FOR INSERT, UPDATE
    AS
    IF NOT UPDATE(CMR_NAME)
       RETURN;
    UPDATE T_CUSTOMER_CMR
    SET    CMR_NAME = UPPER(CMR_NAME)
    WHERE  CMR_ID IN (SELECT CMR_ID FROM inserted);

Here is an example of SQL Server trigger code that forces names to uppercase in a customer table each time a name is inserted or updated.
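The two recursion controls mentioned above correspond to settings that can be sketched as follows. The values shown are examples, not recommendations from the original text.

```sql
-- Database level: forbid a trigger from firing itself again
-- through its own UPDATE (direct recursion)
ALTER DATABASE CURRENT SET RECURSIVE_TRIGGERS OFF;

-- Server level: forbid triggers from firing other triggers
-- (the "ping-pong" case between two tables)
EXEC sp_configure 'nested triggers', 0;
RECONFIGURE;
```

With RECURSIVE_TRIGGERS set to OFF, the UPDATE issued inside a trigger such as E_IU_CUSTOMER does not fire that same trigger again on its own table.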

Both PostgreSQL and SQL Server have DDL triggers, but the SQL Server implementation is more precise (about 460 events or event groups on two different levels: 201 at server scope and 262 at database scope), fully usable, and requires simpler code. As an example, forbidding a CREATE TABLE that does not meet certain criteria is easy in SQL Server and takes 6 lines of code:

    CREATE TRIGGER E_DDL_CREATE_TABLE
    ON DATABASE
    FOR CREATE_TABLE
    AS
    IF EVENTDATA().value('(/EVENT_INSTANCE/ObjectName)[1]', 'sysname') NOT LIKE 'T?_%' ESCAPE '?'
       ROLLBACK;

… but in PostgreSQL, you need two objects and 20 lines of code with more complex logic:

    CREATE FUNCTION e_ddl_create_table_func()
    RETURNS event_trigger
    LANGUAGE plpgsql
    AS $$
    DECLARE obj record;
    BEGIN
       FOR obj IN SELECT * FROM pg_event_trigger_ddl_commands()
                  WHERE command_tag in ('CREATE TABLE')
       LOOP
          IF NOT (parse_ident(obj.object_identity))[2] LIKE 't?_%' ESCAPE '?'
          THEN
             raise EXCEPTION 'The table name must begin with t_';
          END IF;
       END LOOP;
    END;
    $$;
    CREATE EVENT TRIGGER trg_create_table
    ON ddl_command_end
    WHEN TAG IN ('CREATE TABLE')
    EXECUTE PROCEDURE e_ddl_create_table_func();

In SQL Server, when you have multiple triggers for the same object and the same event, you can control the order in which the triggers fire (sp_settriggerorder). In PostgreSQL the order in which the triggers fire is fixed by name (in alphabetical order)! This is not easy when multiple contributors must set their own triggers (remember, many tools use and abuse triggers, because it is the only way to capture data changes in PostgreSQL). By contrast, most of the SQL Server features that require tracking data changes use the transaction log, which is much lighter than using a trigger…
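A minimal sketch of the firing-order control, reusing the trigger E_IU_CUSTOMER shown earlier and assuming it lives in the `dbo` schema:

```sql
-- Make E_IU_CUSTOMER the first AFTER trigger to fire on UPDATE
EXEC sp_settriggerorder
     @triggername = N'dbo.E_IU_CUSTOMER',
     @order       = N'First',
     @stmttype    = N'UPDATE';
```

Note that the procedure only pins the first and the last trigger for a given statement type (`First`, `Last` or `None`); the triggers in between fire in an undefined order.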

What are the query differences between PostgreSQL and SQL Server? Compare the query in PostgreSQL vs. MSSQL

Here too the author omits elements of comparison…

SQL Server can also add to standard SQL advanced types and user-defined types, extensions and custom modules, and additional options for triggers and other functionality, by coding them in a .NET language (C#, Python, Ruby, Scheme, C++…).

And JSON support is included natively.
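The native JSON functions can be sketched in a few lines. The sample document is invented for the example, and the functions assume SQL Server 2016 or later with a matching database compatibility level.

```sql
-- Invented sample document
DECLARE @j NVARCHAR(max) =
   N'{"customer": {"id": 42, "name": "DUPONT"}, "tags": ["vip", "fr"]}';

-- Extract a scalar value by path
SELECT JSON_VALUE(@j, '$.customer.name') AS CUSTOMER_NAME;

-- Turn a JSON array into rows
SELECT [key], [value]
FROM   OPENJSON(@j, '$.tags');

-- Produce JSON from a relational result
SELECT 42 AS id, 'DUPONT' AS name
FOR JSON PATH;
```

JSON is stored here in an ordinary NVARCHAR(max) value, which is how these functions are designed to be used.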

But… PostGreSQL has no MERGE statement (which is a part of ISO SQL Standard).
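For readers unfamiliar with the statement, here is a minimal sketch of a MERGE as SQL Server accepts it. The tables `T_STOCK` and `T_DELIVERY` are hypothetical.

```sql
-- Hypothetical stock table and incoming deliveries
CREATE TABLE T_STOCK    (PRD_ID INT PRIMARY KEY, QTY INT NOT NULL);
CREATE TABLE T_DELIVERY (PRD_ID INT PRIMARY KEY, QTY INT NOT NULL);

-- One statement performs both the update of known products
-- and the insertion of new ones
MERGE INTO T_STOCK AS S
USING T_DELIVERY AS D
   ON S.PRD_ID = D.PRD_ID
WHEN MATCHED THEN
   UPDATE SET QTY = S.QTY + D.QTY
WHEN NOT MATCHED THEN
   INSERT (PRD_ID, QTY) VALUES (D.PRD_ID, D.QTY);
```

In SQL Server the terminating semicolon is mandatory for MERGE.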

What are the full-text search differences between PostgreSQL and SQL Server? Compare full-text search in PostgreSQL vs. MSSQL

The author says that « SQL Server offers full-text search as an optional component », which is a lie… Full-text indexing has been a fully integrated part of SQL Server since the 2008 version!

PostgreSQL indexing is completely proprietary, while SQL Server follows the ISO standard SQL way with the CONTAINS predicate.
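A short sketch of full-text indexing and searching with CONTAINS in SQL Server follows. The table `T_ARTICLE`, the catalog name and the search terms are hypothetical.

```sql
-- A full-text index needs a unique, single-column, non-nullable key index
CREATE TABLE T_ARTICLE
(ART_ID   INT           NOT NULL CONSTRAINT PK_ARTICLE PRIMARY KEY,
 ART_TEXT NVARCHAR(max) NOT NULL);

CREATE FULLTEXT CATALOG FTC_DEMO;

-- LANGUAGE 1033 = English word breaker (an assumption for the example)
CREATE FULLTEXT INDEX ON T_ARTICLE (ART_TEXT LANGUAGE 1033)
   KEY INDEX PK_ARTICLE
   ON FTC_DEMO;

-- Inflected forms of "replicate" combined with a prefix search
SELECT ART_ID
FROM   T_ARTICLE
WHERE  CONTAINS(ART_TEXT, 'FORMSOF(INFLECTIONAL, replicate) AND "fail*"');
```

The index is populated asynchronously, so freshly inserted rows may take a moment to become searchable.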

But SQL Server has some more features that PostgreSQL does not have:

- indexing a large number of different file formats (all the MS Office file types plus .txt, xml, rtf, html, pdf…)

- indexing documents stored as files with the FILESTREAM/FileTable storage

- using semantic indexing

- indexing meta tags in electronic documents

- synonym searching without the limitations that PG has

- easy searching of expanded or contracted terms (acronyms)

What are the regular expression differences between PostgreSQL and SQL Server? Compare regular expressions in PostgreSQL vs. MSSQL

It is true that MS SQL Server has no native regex support; this was done on purpose for security reasons (essentially because of DoS attacks…), but:

- the LIKE operator has some REGEX functionalities ( […], ^…);

- the sensitivity (or not) for characters strings like case, accents (and all diacritics characters like ligatures) kanatype, width (2 = ² ?) can be set while using the LIKE operator combined with a collation, which is required in the standard ISO SQL;

- full REGEX functionality can be obtained by adding a .NET DLL, written in C# by Microsoft, as a UDF (User Defined Function).
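The first two points of this list can be sketched as follows. The table `T_CONTACT`, its columns and the search values are hypothetical.

```sql
-- Hypothetical contact table
CREATE TABLE T_CONTACT
(CTC_ID    INT PRIMARY KEY,
 CTC_NAME  NVARCHAR(64),
 CTC_PHONE VARCHAR(20));

-- Character range: names starting with a letter from A to C
SELECT CTC_ID FROM T_CONTACT
WHERE  CTC_NAME LIKE '[A-C]%';

-- Negated class: phone numbers containing at least one non-digit
SELECT CTC_ID FROM T_CONTACT
WHERE  CTC_PHONE LIKE '%[^0-9]%';

-- LIKE combined with a collation: case- and accent-insensitive search
SELECT CTC_ID FROM T_CONTACT
WHERE  CTC_NAME COLLATE Latin1_General_CI_AI LIKE 'helene%';
```

These patterns are far from a full regular expression engine, but they cover many everyday validation and search needs.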

But, and this is very worrying, the combination of a case-insensitive collation (ICU collation in PostGreSQL) and the LIKE operator, consistently causes a particularly astounding error (ERROR: nondeterministic collations are not supported for LIKE ...) which makes such search unusable!

I don’t know what the concept of a « nondeterministic » collation is, because a collation is a simple surjective mathematical mapping between two sets, one containing the original chars and the second the transformed chars… But the result is, in fact, that PostgreSQL does not have full features to retrieve information when you need case- or accent-insensitive searches!

But the main reason for the lack of a built-in function, like the standard requires (SIMILAR), is that it facilitates denial of service attacks! Having a standard function known to everyone is a catastrophic entry point that makes attacking PostgreSQL servers easier by SQL injection! In SQL Server, via the CLR.net DLL that give the regex features, you can create a user defined function with a customized name… which is more difficult to find for a hacker. The PG documentation is also clear about that point….

The problem is that you cannot easily remove all the regex stuff from PG, like SIMILAR, ~, ~*, !~, !~*, substring, regexp_replace, regexp_split_to_array. Trying to remove the regex part of the substring function is just a nightmare… Of course, you can do it because you can get the source code… But how many years will it take to do so?

What are the partitioning differences between PostgreSQL and SQL Server? Compare the partitioning in PostgreSQL vs. MSSQL

One major difference between PostgreSQL and Microsoft SQL Server on the topic of partitioning is that, in SQL Server, the partition mechanism is generic and complete… which saves time and money and improves security!

When you create partitions in PostgreSQL, you have to create one new (inherited) table for each partition of each table… If you have 30 tables and need to divide the data by month over 3 years, you need to create 30 × 36 = 1080 inherited PostgreSQL tables! What a waste of time….
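To make the count concrete, here is a sketch using PostgreSQL's declarative partitioning syntax (available since version 10), where each partition is still a table of its own. The `orders` table and its columns are assumptions made for the illustration:

```sql
-- Hypothetical parent table, partitioned by month on order_date.
CREATE TABLE orders (
    order_id   bigint        NOT NULL,
    order_date date          NOT NULL,
    amount     numeric(12,2) NOT NULL
) PARTITION BY RANGE (order_date);

-- One CREATE TABLE per month and per partitioned table...
CREATE TABLE orders_2021_01 PARTITION OF orders
    FOR VALUES FROM ('2021-01-01') TO ('2021-02-01');

CREATE TABLE orders_2021_02 PARTITION OF orders
    FOR VALUES FROM ('2021-02-01') TO ('2021-03-01');

-- ...and so on: 36 statements for 3 years of monthly partitions,
-- repeated for each of the 30 tables to partition.
```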

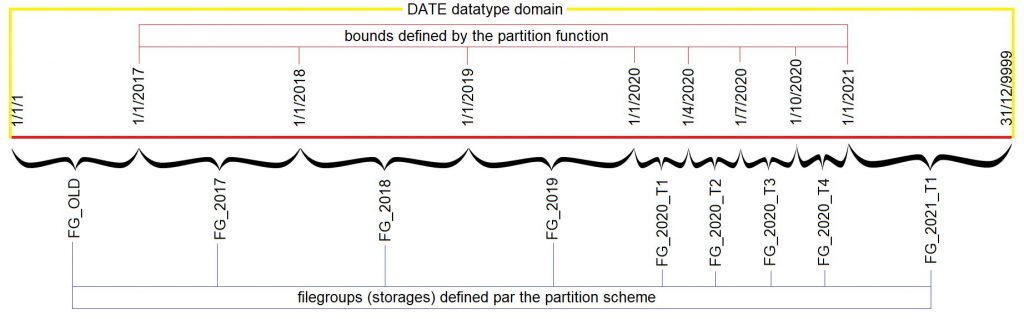

In the SQL Server partitioning system, there are only two objects to create to manage all the partitions you want on all the targeted tables:

- a partition function (CREATE PARTITION FUNCTION);

- a partition scheme (CREATE PARTITION SCHEME);

Finally, you must run an ALTER TABLE for each table or index involved in the partitioning, to indicate that its rows must be stored in the partitioned system if they are not already stored in partitions.

Adding a partition in SQL Server for all the tables that share your partitioning takes just two commands (ALTER PARTITION SCHEME…, ALTER PARTITION FUNCTION…).
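The whole sequence might look like the sketch below. The object names, the boundary dates and the use of the single `[PRIMARY]` filegroup are assumptions made for the illustration; a production design would usually spread partitions over several filegroups:

```sql
-- Boundaries are single values, so every date falls into exactly one
-- partition: yearly up to 2019, then quarterly for 2020.
CREATE PARTITION FUNCTION pf_order_date (date)
AS RANGE RIGHT FOR VALUES
   ('2019-01-01', '2020-01-01', '2020-04-01', '2020-07-01', '2020-10-01');

-- Maps every partition of the function to a filegroup.
CREATE PARTITION SCHEME ps_order_date
AS PARTITION pf_order_date ALL TO ([PRIMARY]);

-- Any number of tables can share the same scheme.
CREATE TABLE dbo.orders (
    order_id   bigint        NOT NULL,
    order_date date          NOT NULL,
    amount     decimal(12,2) NOT NULL,
    CONSTRAINT pk_orders PRIMARY KEY (order_id, order_date)
) ON ps_order_date (order_date);

-- Adding a partition for 2021, for all tables using the scheme at once.
ALTER PARTITION SCHEME ps_order_date NEXT USED [PRIMARY];
ALTER PARTITION FUNCTION pf_order_date() SPLIT RANGE ('2021-01-01');
```

Because the function is defined by boundary points rather than by intervals, there is nothing to declare for the values before the first boundary or after the last one: they fall into the first and last partitions.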

For instance, I had to create the Operational Data Store for the accounting of E.Leclerc (an equivalent of Walmart in France). We decided to partition about 30 tables by store and, with about 300 stores, the result was nearly 9000 units of storage… This took only 10 minutes to do!

As an example, consider the orders made on a website that we want to partition by date:

- For recent invoices, one partition per quarter, back to year n-1 relative to the current year.

- For old invoices (year n-2 and older), one partition per year.

This is summarized by the following figure:

The PostgreSQL solution, based on the Oracle way of partitioning, has several gotchas, because the partition mechanism is based on defining intervals:

- You can « forget » some values of the partitioning key.

- You can create overlapping partitions.

The SQL Server solution systematically guarantees continuity of partitioning, so there is no possibility of forgetting some partitioning key values or of having an overlap between two partitions….

By the way, let’s hear what Ted Codd, the creator of the relational model, had to say about partitioning…

Rule 11: Distribution independence: The end-user must not be able to see that the data is distributed over various locations. Users should always get the impression that the data is located at one site only.

What do you think of this rule, given that in PG you need to design new tables to create partitioning? A table is a logical location of data!

Some more problems remain in the PostgreSQL solution:

- PostgreSQL does not allow partitioning an existing table. This is a severe limitation, because you cannot always know, when designing the database, whether this or that table must be partitioned. Usually you have to wait for the database to grow!

- PostgreSQL cannot create global indexes on partitioned tables. Those indexes are widely used, because many queries do not have the partition criteria in the WHERE clause…

- PostgreSQL cannot create UNIQUE constraints on partitioned tables. Because the pseudo partitioned table is not a table but a collection of tables, it is impossible, by definition, to have a « transversal » unique constraint that checks all the rows of all the child tables… Read…

- PostgreSQL does not offer support for « splitting » or « merging » partitions using dedicated commands;

- PostGreSQL cannot make a partition non-writable (as an example for archive purpose);

- PostGreSQL cannot compress a partition (as an example for lukewarm data).

What are the table scalability differences between PostgreSQL and SQL Server? Compare the table scalability in PostgreSQL vs. MSSQL

The author forgets to say that SQL Server has a system called Distributed Partitioned Views (DPV) that allows horizontal partitioning and gives a way to run parallel instances of SQL Server, offering a wider surface for accessing the data. As an example, fnac.com (our « French » Amazon) uses a farm of servers running simultaneously.

But this topic is related to the paragraph « What are the replication differences between PostgreSQL and SQL Server? Compare replication in PostgreSQL vs. MSSQL »

What are the compliance differences between PostgreSQL and SQL Server? Compare the compliance in PostgreSQL vs. MSSQL

The author seems to indicate that SQL Server has no compliance with HIPAA, GDPR, and PCI. In fact, SQL Server has a higher level of compliance with many of these requirements.

For instance, I would mention four features that PostgreSQL doesn’t have and which are great for securing databases:

- Transparent Data Encryption: encrypts the data in the files (tables, indexes and transaction log, and even tempdb, which is used for temporary tables) and, of course, all the backups taken of the database;

- End-to-end encryption (Always Encrypted): allows clients to encrypt sensitive data inside client applications and never reveal the encryption keys to the Database Engine;

- Extensible Key Management: the keys used to encrypt data and to encrypt other keys are created in transient key containers residing outside the machine, in a secure electronic device called an HSM (Hardware Security Module);

- Dynamic Data Masking: limits sensitive data exposure by masking it to non-privileged users.
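As an illustration of the first item, enabling Transparent Data Encryption on a database takes only a few statements. This is a sketch in which the database name `sales`, the certificate name and the password placeholder are assumptions:

```sql
USE master;

-- The database master key protects the certificate (placeholder password).
CREATE MASTER KEY ENCRYPTION BY PASSWORD = '<use a strong password here>';
CREATE CERTIFICATE tde_cert WITH SUBJECT = 'TDE certificate';

USE sales;

-- The database encryption key is protected by the server certificate.
CREATE DATABASE ENCRYPTION KEY
    WITH ALGORITHM = AES_256
    ENCRYPTION BY SERVER CERTIFICATE tde_cert;

-- Data files, log and subsequent backups are encrypted from here on.
ALTER DATABASE sales SET ENCRYPTION ON;
```

In practice the certificate and its private key should be backed up immediately, since an encrypted backup cannot be restored without them.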

There are many more features I could talk about on this topic that make the difference, but those based on encryption are the most sensitive ones…

What are the NoSQL capability differences between PostgreSQL and SQL Server? Compare the NoSQL capabilities in PostgreSQL vs. MSSQL

By omitting some crucial information, the author is lying about the ability to do NoSQL…

First, SQL Server has JSON features, exactly like PostgreSQL.

As I said earlier:

- Second, Microsoft SQL Server has graph tables that PostgreSQL doesn’t have

- Third, Microsoft SQL Server has « in memory » tables that PostgreSQL doesn’t have

- Fourth, Microsoft SQL Server has « big tables » of up to 30000 columns and vertical indexing (columnstore indexes), which PostgreSQL cannot do… Plus SQL Server Big Data Clusters…

- Fifth, Microsoft SQL Server can create « key-value » pair tables (in memory) that PostgreSQL doesn’t have

So PostgreSQL has very few NoSQL features, only JSON… while SQL Server has the major NoSQL features!

What are the security differences between PostgreSQL and SQL server? Compare the security in PostgreSQL vs. MSSQL

There are some important differences between PostgreSQL and Microsoft SQL Server in the matter of security.

First, PostgreSQL was never designed to separate the security level of operations on the « cluster » (such as creating a new database, controlling connections, backing up…) from commands at the database level.

As an example, a backup (which does not exist in PostgreSQL, which does a dump…) can be controlled at the database level in SQL Server, but in PG it is outside the scope of the database, and also outside the security of the PG cluster! Conversely, SQL Server uses a security entity named « login » (connection) for instance-level operations, and another security entity named « user » at the database level; a login can be mapped to only one user in each database.

The complete list of SQL Server privileges at all levels can be viewed on a poster in PDF format. As you can see, the granularity of privileges in SQL Server bears no comparison with the coarse privileges in PG.

I must say that PostgreSQL has some effort to make in the matter of security:

- CVE-2019-10164 (severity 9.0) shows that a privilege-elevation attack is possible

- CVE-2019-9193 (severity 9.0) shows that COPY TO/FROM PROGRAM allows superusers to execute arbitrary code

- CVE-2018-16850 (severity 7.5) is a vulnerability to SQL injection in pg_upgrade and pg_dump

And the SQL Server database engine is well known as the most secure database system… No CVE with a severity over 6.5 (critical) has been discovered in the past 12 months…

What are the analytical function differences between PostgreSQL and SQL server? Compare the analytical functions in PostgreSQL vs. MSSQL

The author forgot to mention that SQL Server also has DENSE_RANK, NTILE, PERCENT_RANK, RANK and ROW_NUMBER!

What are the administration and GUI tools differences between PostgreSQL and SQL server? Compare the administration with GUI tools in PostgreSQL vs. MSSQL

The author forgot to mention that DBeaver, SQuirreL, Toad… are also administrative tools available for SQL Server, and that tools for monitoring health and performance include Nagios, SolarWinds, Idera, Sentry, Quest, Redgate, Apex…

What are the performance differences between PostgreSQL and SQL server? Compare the performance of PostgreSQL vs. MSSQL

The author says that « because the SQL Server user agreement prohibits the publication of benchmark testing without Microsoft’s prior written approval, head-to-head comparisons with other database systems are rare ». In fact, such contract clauses are « leonine » clauses and are not enforceable, because they are against the law, especially in the fields of human rights and freedom of expression.

So you can publish benchmarks (I have published some), but you must be fair in your comparisons, which is the case when you use an approved benchmark like the ones available from the TPC organization.

What I see is that Microsoft has published a dozen results for those benchmarks, and the current benchmark for OLTP database performance is TPC-E. But I have never seen any results of any kind for PostgreSQL, maybe for fear of ridicule…

Think about one thing… The SQL Server database engine has run queries automatically in parallel since version 7.0 (1998) and, in practice, almost all the operations (over 100 types, actually) in a query execution plan can be parallelized…. In PostgreSQL, only 4 types of operations can use multithreading internally, which seems to be limited to 4 threads…!

With the help of « in memory » optimized tables and columnstore indexes (vertical indexes), SQL Server and PostgreSQL are light-years apart in terms of performance…

Ask yourself why no PostgreSQL OLTP benchmark results have been published on TPC.org…

What are the concurrency differences between PostgreSQL and SQL Server? Compare concurrency in PostgreSQL vs. MSSQL.

The author is confused about MVCC in SQL Server. Let’s talk about real facts.

Concurrent access to data is governed by the ISO SQL standard, which requires 4 isolation levels:

- READ UNCOMMITTED (reads data ignoring locks)

- READ COMMITTED (reads data that has been committed)

- REPEATABLE READ (re-reads data consistently)

- SERIALIZABLE (avoids any type of transaction anomaly)

In the past, for very opportunistic reasons, some RDBMS vendors claimed that those isolation levels were inefficient, because they need to lock data in pessimistic mode (locks are placed before reading or writing), and this mode is subject to contention.

So some vendors decided to use an optimistic mode of locking, which takes a copy of the data before doing anything, so as to stay out of the scope of concurrent accesses for the time it takes to read and write the data… But this technique, used in Oracle and copied by PostgreSQL, is not a panacea…

- First, it does not guarantee that your process will reach the end… If someone has modified the data you also wanted to modify, you lose your work! (« lost update » transactional anomaly)

- Second, copying the data needs more resources than working on the original data.

Finally, the technical solution chosen for the MVCC of PostGreSQL (for duplicating rows) is the worst of all the RDBMS…

- In Oracle, the transaction log (the undo part, to be exact) is used to read the former rows. No additional data is generated for this feature, but the remaining part of the transaction log can grow if a transaction runs for a long time;

- In SQL Server, the tempdb database is used to keep the row versions. Some additional data is generated, outside the production database, and quickly garbage-collected to free space. The tempdb system database has a particular behavior to perform this work. Recently (2019), SQL Server added the ADR/PVS mechanism to accelerate recovery and, when this feature is activated, the row versions can be stored in specific files belonging to the database;

- In PostgreSQL, all the different versions of the table rows are kept inside the data pages of the table; they reside in memory when the data is cached and are then written to disk, which causes table bloat and consequently memory pressure, until a mechanism called VACUUM sweeps out the obsolete rows.

This VACUUM process is well known to be a plague, and generates a lot of locks and deadlocks! Some customers end up stopping the data service in order to perform such maintenance, which, in practice, is not compatible with large, highly transactional databases and 24/7 operation. Even with the recent parallel vacuuming, which I suspect causes even more deadlocks.

SQL Server is known to be the only RDBMS that offers all 4 transaction isolation levels and can be set to either pessimistic or optimistic locking. Pessimistic mode has the advantage of taking fewer resources and guaranteeing completion of the transaction, with the disadvantage of waiting because of blocking.
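In T-SQL, the choice between the two modes could be sketched as follows. The database name `sales` is an assumption, and the ALTER DATABASE statements may need exclusive access to the database before they complete:

```sql
-- Pessimistic (locking) levels, chosen per session; the last SET wins.
SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED;
SET TRANSACTION ISOLATION LEVEL READ COMMITTED;
SET TRANSACTION ISOLATION LEVEL REPEATABLE READ;
SET TRANSACTION ISOLATION LEVEL SERIALIZABLE;

-- Optimistic (row versioning) behavior, enabled per database:
-- READ COMMITTED then reads row versions instead of taking shared locks.
ALTER DATABASE sales SET READ_COMMITTED_SNAPSHOT ON;

-- Allows sessions to request the SNAPSHOT isolation level explicitly.
ALTER DATABASE sales SET ALLOW_SNAPSHOT_ISOLATION ON;
SET TRANSACTION ISOLATION LEVEL SNAPSHOT;
```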

PostgreSQL cannot use all 4 transaction isolation levels and does only optimistic locking, in the scariest way…

And worst of all, for an UPDATE, PostgreSQL acts as a DELETE followed by an INSERT…
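This behavior can be observed through PostgreSQL's system columns. The sketch below uses a throwaway table whose name is an assumption; `ctid` is the physical location of a row version and `xmin` the transaction that created it:

```sql
-- Hypothetical demo table.
CREATE TABLE mvcc_demo (id int PRIMARY KEY, val text);
INSERT INTO mvcc_demo VALUES (1, 'before');

-- Location and creating transaction of the current row version.
SELECT ctid, xmin, id, val FROM mvcc_demo;

UPDATE mvcc_demo SET val = 'after' WHERE id = 1;

-- Same logical row, but ctid and xmin have changed: a new row version
-- was written, and the old one stays in the page until VACUUM removes it.
SELECT ctid, xmin, id, val FROM mvcc_demo;
```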

What are the environment and stack differences between PostgreSQL and SQL server? Compare the environment and stack in PostgreSQL vs. MSSQL

The author seems to want to confine SQL Server to the Microsoft world, which in fact is not true.

- First, SQL Server came to the Linux world in 2017.

- Second, SQL Server is widely used with Linux, Apache, Java and PHP clients.

- Third, SQL Server runs in multiple clouds (Amazon, Azure…), on multiple hypervisors (Hyper-V, VMware…) and in Docker.

- Fourth, SQL Server has integrated the Python and R languages as a full part of SQL Server (which PostgreSQL has not…)

What are the 40 hidden gems that SQL Server has and PostgreSQL does not have? Compare the shortcomings of PostgreSQL vs. MSSQL

This section is my own! Of course, someone paid in one way or another by EDB would not write on such a mischievous topic…

1 – Compliance to the SQL standard

PostgreSQL claimed in the past to be the RDBMS most compliant with the ISO SQL standard. It was perhaps true… in the old days! But today, no.

The most conformant RDBMS are DB2 and SQL Server!

Some examples must be given:

- SQL identifiers must allow a length of 128 characters. This is true in SQL Server, but not in PostgreSQL, which limits SQL names to 63 characters.

- The ISO SQL standard uses OFFSET … FETCH after the ORDER BY clause, while PostgreSQL uses LIMIT … OFFSET!

- Pessimistic locking is the default in the standard (and it must be possible to activate READ UNCOMMITTED). This is true in SQL Server, but not in PostgreSQL, which only does optimistic locking.

- The MERGE SQL statement has been specified since the 2003 version of the SQL standard and is part of SQL Server, but has never been released in PostgreSQL.

- Temporal tables were defined in the 2011 version of the ISO SQL standard and have been available in SQL Server since the 2016 version. PostgreSQL uses an abominable, unusable ersatz: none of the SQL temporal operators has been implemented…

- The SQL standard makes a big distinction between PROCEDUREs and functions, called UDFs (User Defined Functions). MS SQL Server respects it, while PG confuses procedures and functions, which causes a great lack of security (a user can create an UPPER function that discards data, because of the overloading strategy…).

- Creating a trigger needs a single statement in the standard, and in SQL Server too, but in PostgreSQL you have to code 2 distinct objects.

- The DATALINK way of storing files under the control of the database, from the ISO SQL standard, has never been provided in PostgreSQL, while SQL Server has had it since the 2008 version under the name FILESTREAM.

- And probably the most confusing problem is that some PostgreSQL functions have the same name as a standard function but do not do at all what the standard specifies.
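Two of the items above, the OFFSET … FETCH pagination and the MERGE statement, can be sketched in T-SQL as follows. The tables `dbo.orders`, `dbo.product` and `dbo.product_staging` and their columns are assumptions made for the illustration:

```sql
-- Standard-style pagination: skip 20 rows, return the next 10.
SELECT order_id, order_date
FROM   dbo.orders
ORDER  BY order_date DESC, order_id
OFFSET 20 ROWS FETCH NEXT 10 ROWS ONLY;

-- MERGE: update existing products and insert new ones in one statement.
MERGE dbo.product AS t
USING dbo.product_staging AS s
      ON t.product_id = s.product_id
WHEN MATCHED THEN
    UPDATE SET t.price = s.price
WHEN NOT MATCHED BY TARGET THEN
    INSERT (product_id, price) VALUES (s.product_id, s.price);
```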

As developments progress, PostGreSQL moves further and further away from the SQL standard, making portability more difficult …

2 – Stored procedure that returns multiple datasets

One feature I like very much when designing a website is the ability of Microsoft SQL Server stored procedures to return multiple result sets in a single round trip… If you consider performance, you will see that the major part of the time consumed is due to round trips. Drastically reducing the number of round trips results in better performance and reduces contention and, of course, deadlocks…

There is no possibility in PostgreSQL of having PL/pgSQL routines that return several datasets to the client application…
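A minimal sketch of such a procedure, assuming hypothetical `dbo.orders` and `dbo.order_line` tables, could be:

```sql
CREATE PROCEDURE dbo.get_order_page
    @order_id bigint
AS
BEGIN
    SET NOCOUNT ON;

    -- First result set: the order header.
    SELECT order_id, order_date, amount
    FROM   dbo.orders
    WHERE  order_id = @order_id;

    -- Second result set: the order lines.
    SELECT line_no, product_id, quantity
    FROM   dbo.order_line
    WHERE  order_id = @order_id;
END;
GO

-- One call, one round trip, two result sets for the client to read.
EXEC dbo.get_order_page @order_id = 42;
```

`GO` is the batch separator of client tools such as sqlcmd or SSMS, not a T-SQL statement. On the client side, the result sets are read one after the other from the same response (for example with `NextResult()` on an ADO.NET data reader).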

MARS (Multiple Active Result Sets) is another feature that PG does not have. It allows applications to have more than one active request per connection; in particular, you can execute other SQL statements (for example, INSERT, UPDATE, DELETE, and stored procedure calls) while a default result set is open and can be read.

3 – Automatic missing index diagnosis

SQL Server is the only RDBMS to provide a full diagnosis of missing indexes. This has been the case since the 2005 version! It is therefore possible to improve performance very quickly. I remember that, at one of my clients, we divided the average query response time by 20 in less than half a day by creating a hundred indexes, where SQL Server had diagnosed more than 300 missing!

There is no index diagnosis tool in PostgreSQL: you must enable a tracking tool to record query performance, then manually analyze thousands and thousands of queries and try to create the most relevant index in each case. Such work is very time-consuming, and the productivity is very low and frustrating for the DBA!
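The diagnosis is exposed through dynamic management views. A query along the following lines lists the suggestions; the ordering expression is just one possible way to rank them and is an assumption of this sketch:

```sql
SELECT d.statement          AS table_name,
       d.equality_columns,
       d.inequality_columns,
       d.included_columns,
       s.user_seeks,
       s.avg_user_impact    -- estimated improvement, in percent
FROM   sys.dm_db_missing_index_details     AS d
JOIN   sys.dm_db_missing_index_groups      AS g
       ON g.index_handle = d.index_handle
JOIN   sys.dm_db_missing_index_group_stats AS s
       ON s.group_handle = g.index_group_handle
ORDER  BY s.user_seeks * s.avg_user_impact DESC;
```

The suggestions still deserve a review before creating anything, since several of them often overlap and can be merged into fewer indexes.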

4 – Storing files as « system » files under the RDBMS control

Another feature I like very much is the concept of FILESTREAM, widely used for SharePoint. This is an ISO SQL standard feature called DATALINK (SQL:1999), rarely implemented in DBMS…. FILESTREAM allows you to keep the files that you want to store in a database in the OS file system (outside the table), but under the transactional responsibility of the RDBMS! This makes it possible to always keep the files (pictures, PDFs and so on) and the table data in sync: if the transaction is rolled back, the insertion of the associated file is also cancelled. In the event of a backup, even a partial backup (which is entirely possible), the FILESTREAM files are collected by the backup process and restored during the restore without any loss, which no other RDBMS guarantees today, not even Oracle!

One extension of FILESTREAM is the FileTable concept, which is a two-sided mirror of the Windows file system… Creating a FileTable at an entry point of the Windows file system gives a dual interface to the stored files: one side manages the files in Windows, the other manages the files in SQL Server!

The ISO SQL DATALINK feature, or anything similar, does not exist in PostgreSQL.

5 – A transaction log for each database

Microsoft SQL Server uses one transaction log per database. If you have 100 databases, you have 100 different transaction logs, plus the transaction logs of the system databases, especially tempdb, which is used for temporary objects (tables…). And if you use FILESTREAM, a special transaction log is added just for the transacted input/output of the files stored under the control of SQL Server…

PostgreSQL uses only one transaction log for all the concurrent databases, into which all the transactions are written. This results in contention on disk I/O when writing the transactions, which is a heavy brake on performance. Many other problems occur because of this behavior in backup processes, log shipping and streaming replication when there are a lot of databases in the PG cluster…

6 – Parametric read-write views

Inline table-valued UDFs (User Defined Functions) do not exist in PostgreSQL. These functions are literally « parametric views », and can be used to read data using function arguments, but also to write (INSERT, UPDATE, DELETE) to the underlying tables.

They are defined like this:

```sql
CREATE FUNCTION MyOnlineTableFunctionName ( <list_of_variables> )
RETURNS TABLE
AS
RETURN (SELECT ... )
```
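A concrete instance of this template might look like the sketch below, in which the `dbo.orders` table, its `customer_id` column and the function name are assumptions:

```sql
CREATE FUNCTION dbo.orders_of_customer (@customer_id int)
RETURNS TABLE
AS
RETURN (
    SELECT order_id, customer_id, order_date, amount
    FROM   dbo.orders
    WHERE  customer_id = @customer_id
);
GO

-- Read through the function, like a view with a parameter.
SELECT order_id, amount
FROM   dbo.orders_of_customer(42);

-- Write through the function: the underlying dbo.orders rows are updated.
UPDATE o
SET    amount = amount * 0.9
FROM   dbo.orders_of_customer(42) AS o;
```

Because the function body is a single SELECT, the optimizer expands it into the calling query, exactly as it would for a view.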

7 – Database snapshot

Sometimes you need a readable copy of a database, containing the values of the data at a specific point in time. This is possible with the concept of the « database snapshot ». Creating such an object is easy and immediate, whatever the database volume.

Database snapshots do not exist in PostgreSQL.

As an example, the purpose can be enterprise reporting at a stable point in time, or developer tests that want to compare values before and after a big batch process and can restore the « before » values with a RESTORE DATABASE … FROM DATABASE_SNAPSHOT.
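The « before and after a batch » scenario could be sketched like this. The database name `sales`, its logical data file name `sales_data`, the snapshot name and the file path are all assumptions:

```sql
-- Create the snapshot: one sparse file per data file of the source database.
CREATE DATABASE sales_snapshot_before_batch
ON (NAME = sales_data,
    FILENAME = 'D:\snapshots\sales_data_before_batch.ss')
AS SNAPSHOT OF sales;

-- The snapshot is queried like any read-only database.
SELECT COUNT(*) FROM sales_snapshot_before_batch.dbo.orders;

-- Revert the source database to the state captured by the snapshot.
USE master;
RESTORE DATABASE sales
FROM DATABASE_SNAPSHOT = 'sales_snapshot_before_batch';
```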

8 – Temporary tables

In SQL Server, all temporary objects, including of course temporary tables, are created in a dedicated database called tempdb. This system database benefits from being stored separately on a very fast storage device like Intel Optane or NVMe or, for even more performance, from « Memory-optimized tempdb metadata », provided you have enough RAM.

This database is especially designed for very fast transactions and data movements:

- parallelism is systematically active and storage resides on multiple files;

- a specific garbage collector avoids erasing unused tables too quickly, in case of reuse.

PostgreSQL does not have a special DB to do that, but you can specify a distinct path to store temporary objects with the command:

```sql
alter system set temp_tablespaces = '...my path for temp table...'
```

Of course, PostgreSQL does not have an « in memory » feature, even for temp tables…

9 – Temporal table…

The concept of the temporal table (not to be confused with temporary tables) has been fully specified by the ISO SQL standard and consists of adding:

- time intervals for transaction periods on the rows of the table (2 columns with UTC datetimes that can be hidden, i.e. the columns will not appear when querying with SELECT *)

- a history table in which you will find older values of rows (after every update and delete)

- many operators (AS OF …, FROM … TO …, BETWEEN … AND …, CONTAINED IN …., and finally ALL) to retrieve values at different points or periods of time.

The history table can be created as an « in memory » table in SQL Server. If not, all the data in the temporal table is stored in a compressed mode.
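Put together, the three elements listed above might look like this in T-SQL. It is a sketch in which the table, column and history table names are assumptions:

```sql
CREATE TABLE dbo.product_price (
    product_id int           NOT NULL PRIMARY KEY,
    price      decimal(10,2) NOT NULL,
    -- Period columns, maintained by the engine in UTC and hidden from SELECT *.
    valid_from datetime2 GENERATED ALWAYS AS ROW START HIDDEN NOT NULL,
    valid_to   datetime2 GENERATED ALWAYS AS ROW END   HIDDEN NOT NULL,
    PERIOD FOR SYSTEM_TIME (valid_from, valid_to)
)
WITH (SYSTEM_VERSIONING = ON (HISTORY_TABLE = dbo.product_price_history));

-- Price of product 42 as it was at a given instant.
SELECT product_id, price
FROM   dbo.product_price
       FOR SYSTEM_TIME AS OF '2021-01-01T00:00:00'
WHERE  product_id = 42;

-- Every version of the row, current and historical.
SELECT product_id, price, valid_from, valid_to
FROM   dbo.product_price
       FOR SYSTEM_TIME ALL
WHERE  product_id = 42
ORDER  BY valid_from;
```

No trigger is involved: ordinary UPDATE and DELETE statements on `dbo.product_price` feed the history table automatically.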

The solution that PostGreSQL offers for temporal table has many drawbacks:

- the design of PostgreSQL temporal tables has nothing to do with the SQL standard…

- in PostgreSQL, temporal tables use triggers to generate all this, resulting in poor performance and many locks;

- PostgreSQL has no data compression mechanism to save data volume and execute queries faster when you query the history table;

- the interval, which is not composed of 2 datetimes, is hard to index and results in poor performance when queried;

- hiding the interval columns is not possible in PostgreSQL, so you cannot add them to software vendors’ databases;

- none of the temporal SQL operators has been implemented….

In fact, the PostgreSQL temporal solution is a ridiculous ersatz of what you can have with the standard, without performance, and is strictly unusable!

Another way of dealing with temporal information is the one by Dalibo called E-Maj… This contrib, close to the Oracle concept of flashback queries (but enrolling multiple objects), uses heavy triggers and costly logging tables, and does not support any schema change! Of course, it is also far away from the standard SQL way of doing it…

As for Microsoft, SQL Server stores the history table in a compressed form, with a special index type called TSB-tree, optimized for temporal multiversion data.

10 – XML

The implementation of XML in SQL Server is a gemstone! In PostgreSQL, XML functionalities are poor and offer paltry performance.

First, you can enforce the type of the documents stored in an XML column with an XML schema collection. Each element of an XML schema collection is an XML schema document that defines the XML content of the documents to be stored. If no corresponding XML schema is found in the collection, the XML document will be rejected, as with any other SQL constraint! There is no way to add constrained XML documents in PostgreSQL…

This type of constraint speeds up information retrieval, because path expressions can be translated into efficient SQL, even in the presence of recursive XML schemas.

Second, dealing with XML in table columns is easy because SQL Server uses XQuery, XML DML and XPath 2 with five methods (query, value, exist, modify, nodes). PostgreSQL only uses XPath 1, which is a severe limitation when manipulating XML data.

Third, modifying a piece of data inside an XML document stored in a table is easy with the modify method, which changes only the relevant information and not the whole content of the XML document, as PostgreSQL does!

Fourth, PostgreSQL has no way to index stored XML documents… Indexing XML documents is one of the most accomplished features in SQL Server, and one of the simplest!

You first need to create a primary XML index that serves as a « backbone » for other indexes and already provides significant gains in access. You can then choose to implement one, two, or three of the other specialized indexes for PATH, for VALUE or for PROPERTY…
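As a sketch of the indexing steps and of the XML methods mentioned earlier, assuming a hypothetical `dbo.purchase_order` table whose documents have an `/order` root element:

```sql
CREATE TABLE dbo.purchase_order (
    po_id int NOT NULL PRIMARY KEY,  -- XML indexes require a clustered primary key
    doc   xml NOT NULL
);

-- The primary XML index is the « backbone »...
CREATE PRIMARY XML INDEX pxi_purchase_order_doc
    ON dbo.purchase_order (doc);

-- ...on which a specialized secondary index (here for PATH) is built.
CREATE XML INDEX sxi_purchase_order_doc_path
    ON dbo.purchase_order (doc)
    USING XML INDEX pxi_purchase_order_doc FOR PATH;

-- exist() filters on a path expression, value() extracts a typed scalar.
SELECT po_id,
       doc.value('(/order/customer/@id)[1]', 'int') AS customer_id
FROM   dbo.purchase_order
WHERE  doc.exist('/order/line[@qty > 10]') = 1;

-- modify() changes one value in place inside the stored document.
UPDATE dbo.purchase_order
SET    doc.modify('replace value of (/order/status/text())[1] with "shipped"')
WHERE  po_id = 1;
```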

The Microsoft research paper on this topic is available at these URLs:

https://ecommons.cornell.edu/bitstream/handle/1813/5661/TR2004-1961.pdf;j

https://www.immagic.com/eLibrary/ARCHIVES/GENERAL/MICROSFT/M040611P.pdf

Tell me why the PG staff cannot apply such techniques in the PostgreSQL relational engine?

And finally, XML documents stored inside a table column as the XML or BINARY datatype, or outside the table via FILESTREAM, can be full-text indexed…

11 – Trees stored in path format

SQL Server comes with the hierarchyid datatype, which allows storing a tree in path mode and provides manipulation methods to do so. No such tree facilities exist in PostgreSQL, except the ltree contrib, which is in its infancy and does roughly 15% of what SQL Server does in this area.
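A small organization chart gives an idea of how the datatype is used. The table and its content are assumptions made for this sketch:

```sql
CREATE TABLE dbo.org_chart (
    node hierarchyid   NOT NULL PRIMARY KEY,
    name nvarchar(100) NOT NULL
);

INSERT INTO dbo.org_chart (node, name) VALUES
    (hierarchyid::GetRoot(),      N'CEO'),
    (hierarchyid::Parse('/1/'),   N'CTO'),
    (hierarchyid::Parse('/1/1/'), N'DBA'),
    (hierarchyid::Parse('/2/'),   N'CFO');

-- The subtree rooted at /1/ (a node counts as its own descendant).
SELECT name,
       node.ToString() AS path,
       node.GetLevel() AS depth
FROM   dbo.org_chart
WHERE  node.IsDescendantOf(hierarchyid::Parse('/1/')) = 1;
```

Note that the method names are case-sensitive, since they are CLR methods.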

To be honest, hierarchyId is not my favorite solution. I prefer to store trees as intervals, which is clearly the fastest way to do so.

12 – Custom aggregate function

The reason why you cannot define aggregate functions directly in Transact-SQL is that SQL Server cannot parallelize the execution of UDFs. So, to empower such functions, Microsoft intelligently decided to allow the creation of these objects in SQL CLR (.NET), with some pre-programmed methods to facilitate multithreaded code.

Splitting the function execution over multiple threads is done through the « Accumulate » method; then the « Merge » method merges the results of the different threads into a single one.

There is no way to create custom aggregate functions that execute in parallel in PostgreSQL…

13 – Dynamic Data Masking

This integrated SQL Server feature allows you to mask data with various functions, which is of great use for the GDPR. PostgreSQL does not have any free dynamic data masking functionality, but there is a project called PostgreSQL Anonymizer, which is at an early stage of development and should be used carefully. Paid versions also exist, like DataSunrise Data Masking for PostgreSQL or the DBHawk tools.
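A masked table could be declared as in the sketch below. The table, the mask patterns and the `compliance_officer` user are assumptions:

```sql
CREATE TABLE dbo.customer_contact (
    customer_id  int IDENTITY PRIMARY KEY,
    -- Built-in masks: e-mail pattern, partial string, type default.
    email        varchar(256)  MASKED WITH (FUNCTION = 'email()') NULL,
    phone        varchar(20)   MASKED WITH (FUNCTION = 'partial(0,"XX-XX-XX-",2)') NULL,
    credit_limit decimal(10,2) MASKED WITH (FUNCTION = 'default()') NULL
);

-- Users without the UNMASK permission see masked values in their queries;
-- the stored data itself is unchanged.
GRANT UNMASK TO compliance_officer;
```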

14 – LOGON trigger

A logon trigger is very useful when you want to control who accesses the instance (cluster in PG words) and the database, and also to limit the number of users using the same login.

Logon triggers are embryonic in PostgreSQL.

More precisely, there is a contribution, with some drawbacks, like the need for a systematic public permission… What a lack of security!

15 – Impersonate

Microsoft SQL Server has two levels of impersonation: at the session level and at the routine level (procedures and triggers). PostgreSQL has only session impersonation and cannot impersonate the execution of a routine, which is a great feature for tracing user activities with many technical metadata when the user is usually not granted access to this information, especially for use under the GDPR. This feature is also a great help for coding routines (procedures, triggers…) with some extra privileges that the SQL user does not ordinarily have. In this way, the code is closer to what we do in object-oriented languages…

16 – Service broker, a data messaging system

While applications have SOA (Service Oriented Architecture), which means services running and cooperating by sending messages, this architecture can be brought down to the database layer… That’s SODA (Service Oriented Database Architecture)…

Such a system is built into SQL Server under the name « Service Broker » and is made of queues (which are specific tables), services (to send and receive messages), conversations (to manage a thread of requests and responses)… Of course, to manage it over HTTP, you have to deal with HTTP endpoints, contracts and routes, and encrypt your data…

There is no equivalent to Service Broker in PostgreSQL to transmit data messages from one cluster to another with routing, serialization and transactions.

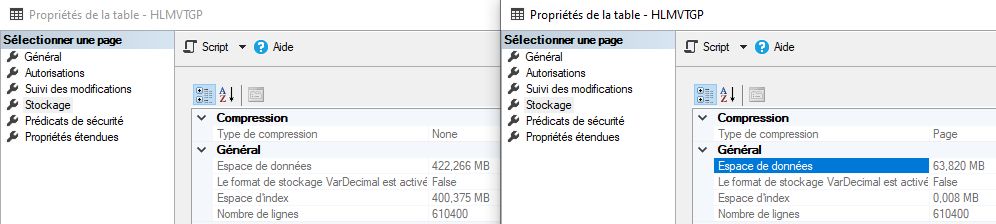

17 – Data and index compression

Another very unfortunate lack in PostgreSQL is the absence of data and index compression. SQL Server has several modes for compressing data:

- Table/index compression with two modes: rows or pages

- Columnstore indexes, which can compress the whole table (clustered columnstore index) or only one index (about 10 times less volume)

Of course, a compressed index does not need to be decompressed to seek the data.
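In practice, these modes are applied with statements like the following. The object names (`dbo.orders`, `ix_orders_customer`, `dbo.fact_sales`) are assumptions:

```sql
-- Estimate the gain before rebuilding anything.
EXEC sp_estimate_data_compression_savings
     @schema_name      = 'dbo',
     @object_name      = 'orders',
     @index_id         = NULL,
     @partition_number = NULL,
     @data_compression = 'PAGE';

-- Page compression for the table, row compression for one of its indexes.
ALTER TABLE dbo.orders REBUILD WITH (DATA_COMPRESSION = PAGE);
ALTER INDEX ix_orders_customer ON dbo.orders
    REBUILD WITH (DATA_COMPRESSION = ROW);

-- Columnstore storage for a whole (fact) table.
CREATE CLUSTERED COLUMNSTORE INDEX cci_fact_sales ON dbo.fact_sales;
```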

18 – Resumable indexes

When dealing with very large tables (hundreds of GB…), one big problem is how long indexing such tables takes. Even if you create indexes in ONLINE mode, some indexes can take a very long time to build. If the instance becomes overloaded at some point, you will need to cancel the CREATE INDEX process.

In SQL Server there is another intelligent way to deal with such a problem, called « resumable indexes »…

- First, you need to create the index with the ONLINE and RESUMABLE options.

- Second, at any time you can stop the CREATE INDEX and preserve the work done with the command ALTER INDEX … PAUSE.

- Third, you can restart the CREATE INDEX from the point where it was, with the command ALTER INDEX … RESUME.

Another possibility is to create the index with the MAX_DURATION option. When the CREATE INDEX command exceeds the specified amount of time, the resumable online index operation is suspended.
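The three steps and the MAX_DURATION variant could be sketched as follows, on a hypothetical `dbo.big_table`. Resumable index creation assumes SQL Server 2019 or later, and the PAUSE and RESUME commands are issued from another session while the build is running or suspended:

```sql
-- Session 1: start a resumable online build, suspended automatically
-- if it runs for more than 60 minutes.
CREATE INDEX ix_big_table_customer
    ON dbo.big_table (customer_id)
    WITH (ONLINE = ON, RESUMABLE = ON, MAX_DURATION = 60 MINUTES);

-- Session 2: suspend the build and keep the work already done.
ALTER INDEX ix_big_table_customer ON dbo.big_table PAUSE;

-- Later: continue from the point where the build stopped.
ALTER INDEX ix_big_table_customer ON dbo.big_table RESUME;

-- Progress of resumable operations can be followed in this catalog view.
SELECT name, state_desc, percent_complete
FROM   sys.index_resumable_operations;
```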

There is nothing equivalent to the resumable index operation in PostgreSQL.

19 – Page restoration

Before restoring the whole database, in the case of a few damaged pages, you can try to recover those pages by retrieving them from the different backups. This operation is done via the RESTORE command. In the Enterprise edition, this process can be executed online.
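A page restore sequence might look like the sketch below, in which the database name, the page identifier `1:57` (file 1, page 57) and the backup paths are assumptions. Damaged pages detected by the engine are listed in `msdb.dbo.suspect_pages`:

```sql
-- Which pages are damaged?
SELECT database_id, file_id, page_id, event_type
FROM   msdb.dbo.suspect_pages;

-- Restore only the damaged page from the last full backup...
RESTORE DATABASE sales PAGE = '1:57'
    FROM DISK = 'E:\backup\sales_full.bak'
    WITH NORECOVERY;

-- ...apply the existing log backups...
RESTORE LOG sales
    FROM DISK = 'E:\backup\sales_log_1.trn'
    WITH NORECOVERY;

-- ...then back up and apply the tail of the log to bring the page up to date.
BACKUP LOG sales TO DISK = 'E:\backup\sales_log_tail.trn';
RESTORE LOG sales
    FROM DISK = 'E:\backup\sales_log_tail.trn'
    WITH RECOVERY;
```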

When using the Mirroring/ AlwaysOn high availability system, the damaged pages are automatically repaired…

PostGreSQL does not have any possibility of page repair! When you lose some data in a table page, you are condemned to restore the full backup…

20 – Intelligent optimizer (« planner » as they call it in PG)

Further remarkable features for database engine performance were finalized in SQL Server 2019 under the name Intelligent Query Processing:

- Scalar UDF inlining: scalar UDFs are automatically transformed into scalar expressions or scalar subqueries that are substituted in the calling query in place of the UDF operator. The result is a spectacular performance gain for some classic UDFs!

- Memory grant feedback: when the amount of memory reserved for a query has been overestimated, the optimizer reduces the grant the next time a similar query is executed. The result is more memory left in cache for data and for other client operations.

- Batch mode on rowstore: usually the operators in a query execution plan process rows one by one. In batch mode the qualifying rows are processed in batches of up to about a thousand rows at a time. The result is a significant performance gain on big tables.

- Table variable deferred compilation: because a table variable is still empty when the query is compiled, the optimizer cannot estimate its cardinality correctly. For instance, PG assumes 100 rows for such an object. SQL Server now defers the compilation of a statement that references a table variable until its first actual execution, once the variable has been filled, so the rest of the plan is built on the real cardinality and performs much better than the plan first estimated. The result is a clear performance gain when you use table variables.

- Adaptive joins: with some large data volumes, where join cardinality cannot be estimated accurately, an adaptive join is created that actually contains two join algorithms, and the final choice of algorithm is made after a first read of the smaller input (in terms of cardinality). The result is a faster join in some heavy queries.

- Automatic plan correction: when a query regresses because its plan changed, previously executed plans of the same query are often still valid, and in such cases reverting to a cheaper, previously executed plan resolves the regression.
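For readers who want to check these features on their own instance, here is a short T-SQL sketch. The database name SalesDb is hypothetical; the settings shown are the ones documented for SQL Server 2019, and most of them are already ON by default at compatibility level 150:

```sql
-- Most Intelligent Query Processing features are tied to compatibility level 150.
ALTER DATABASE SalesDb SET COMPATIBILITY_LEVEL = 150;
GO
USE SalesDb;
GO
-- Which scalar UDFs does the engine consider eligible for inlining?
SELECT OBJECT_SCHEMA_NAME(m.object_id) AS schema_name,
       OBJECT_NAME(m.object_id)        AS function_name,
       m.is_inlineable
FROM sys.sql_modules AS m
JOIN sys.objects     AS o ON o.object_id = m.object_id
WHERE o.type = 'FN';

-- Individual features can be switched per database.
ALTER DATABASE SCOPED CONFIGURATION SET TSQL_SCALAR_UDF_INLINING = ON;
ALTER DATABASE SCOPED CONFIGURATION SET BATCH_MODE_ON_ROWSTORE = ON;

-- Automatic plan correction relies on Query Store.
ALTER DATABASE SalesDb SET QUERY_STORE = ON;
ALTER DATABASE SalesDb SET AUTOMATIC_TUNING (FORCE_LAST_GOOD_PLAN = ON);

-- Regressions detected and corrections proposed or applied by the engine.
SELECT reason, score, state
FROM sys.dm_db_tuning_recommendations;
```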

All those features, combined with compression, vertical indexing (columnstore indexes) and automatic parallelism, make the SQL Server engine the most powerful RDBMS in the world (even Oracle does not use vertical indexes for OLTP workloads).

For information, PostGreSQL has only 3 join algorithms where SQL Server has 5 (nested loop, hash, merge, union and adaptive…).

In contrast, the PostGreSQL query « planner » (why not call it a query optimizer? Because it does not optimize at all?…) proves unable to do a good job when queries contain a large number of joins or subqueries. Why? The optimization strategy of the PG planner is a heavy consumer of resources (exhaustive search), and a threshold has been defined (default value: 12 FROM items) beyond which it switches to a genetic algorithm called GEQO… but the PG staff themselves have voiced some criticism of their own solution (GEQO): « At a more basic level, it is not clear that solving query optimization with a GA algorithm designed for TSP is appropriate »…

Let's face it, optimizing complex queries in PostGreSQL is a nightmare!

Even with ordinary queries, the PostGreSQL optimizer can go wrong because of its poor MVCC (Multi Version Concurrency Control) handling, as in this user case from StackExchange:

Postgresql not using index on WHERE IN but works with WHERE =

We see that when the subquery is introduced by the equality operator, the PG planner uses an index, and when the operator is « IN », no index is used… But after the phantom records left in the data pages have been cleaned up by the VACUUM process, the index is used in both cases… Of course, in a production environment it is impossible to keep triggering « VACUUM » all the time in the hope of obtaining an adequate execution plan!

Even more stupid, the PostGreSQL optimizer (pardon, « planner ») is unable to understand that a constant does not change its value while the query is running… As a test, compare the time consumed by a SELECT COUNT(*) and a SELECT COUNT(true), the latter using a constant: the second form of the query runs longer! All other RDBMS detect the constant and run both forms in the same time.
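The COUNT test can be reproduced with a small script like this one (PostgreSQL dialect). The scratch table count_test and its size are arbitrary choices made for this sketch; run each EXPLAIN several times and compare the reported execution times on your own hardware:

```sql
-- Hypothetical scratch table with ten million rows.
CREATE TABLE count_test AS
SELECT g AS id
FROM generate_series(1, 10000000) AS g;

-- First form: COUNT(*).
EXPLAIN (ANALYZE) SELECT COUNT(*) FROM count_test;

-- Second form: COUNT of a constant.
EXPLAIN (ANALYZE) SELECT COUNT(true) FROM count_test;

DROP TABLE count_test;
```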

21 – Query hints

Query hints: even if it is bad practice (and I really think so!), the ability to use SQL query hints to impose an algorithmic strategy on the optimizer is clearly a good temporary solution when the optimizer goes wrong… It is also a solution offered by the paid version of PostGreSQL delivered by EDB!

SQL Server offers two kinds of hints: table hints and query hints.

The proponents of free PostGreSQL categorically reject this approach, but the presence of hints in EDB makes me think that it is more to protect a market than out of stupidity…

In SQL Server there are many ways to deal with query hints:

- adding hints directly in the SQL text of the query;

- adding a « plan guide » that attaches the hints to the SQL text of the query on the fly (very useful when the application comes from a difficult vendor…);

- some hints can also be enabled at the database level or the instance level (Trace Flag);

- analyzing the bad and good plan versions of the same query and deciding to force the best one, even with hints, which in SQL Server is the role of Query Store.
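The first, second and fourth approaches might look like this in T-SQL. Tables, columns, index and plan guide names are hypothetical, and the query and plan ids passed to Query Store are placeholders to be read from sys.query_store_plan:

```sql
-- 1) Hints in the query text: a table hint (forced index)
--    and query hints (join algorithm, degree of parallelism).
SELECT o.OrderId, c.Name
FROM dbo.Orders AS o WITH (INDEX (IX_Orders_CustomerId))
JOIN dbo.Customers AS c ON c.CustomerId = o.CustomerId
WHERE o.OrderDate >= '20210101'
OPTION (HASH JOIN, MAXDOP 4);

-- 2) Plan guide: the hint is attached on the fly to a statement
--    whose text cannot be modified (vendor application).
--    @stmt must match the submitted statement text exactly.
EXEC sp_create_plan_guide
     @name            = N'PG_Orders_Count',
     @stmt            = N'SELECT COUNT(*) FROM dbo.Orders;',
     @type            = N'SQL',
     @module_or_batch = NULL,
     @params          = NULL,
     @hints           = N'OPTION (MAXDOP 1)';

-- 4) Query Store: force the plan that was found to be the best one.
EXEC sp_query_store_force_plan @query_id = 48, @plan_id = 49;
```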

In PostGreSQL the only possibility is to enable some hints at the session or cluster level, and this approach has severe limitations: no way to enable a hint for one query and not another, and no way to add a query hint on the fly, which is the only way to correct bad plans when they are in a vendor application!

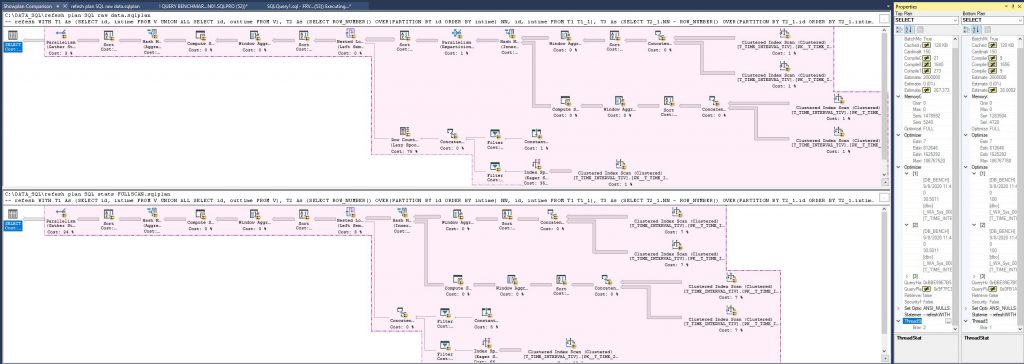

22 – Query plan analysis

In SQL Server, the execution plan of a query can be analyzed with the graphical query plan viewer and the plan comparison tool.

The poor tools that PostGreSQL offers for visualizing and comparing query execution plans date from the iron age compared to what SQL Server Management Studio (SSMS) can do!

Not only can you see which step of the execution plan your query has reached, but SSMS can also show you the differences between two similar execution plans.

Some full-featured add-ons like SentryOne Plan Explorer (a free tool) quickly reveal the bottlenecks of a query plan through a color code, dark red marking a heavy step…

Another possibility is to watch query execution plans while they run, with « Live Query Statistics »:

Query execution plans running with « Live Query Statistics » (courtesy of Manoj)

This is very useful when you are executing very long queries and want to know which steps are currently running…
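The same live information can be read without the graphical tool. A minimal sketch, assuming that query profiling is active for the monitored session (it is lightweight and on by default in SQL Server 2019) and that 57 is the hypothetical session id of the long-running query:

```sql
-- Progress of each operator of the plan currently running in session 57.
SELECT p.node_id,
       p.physical_operator_name,
       p.row_count,            -- rows produced so far
       p.estimate_row_count,   -- rows expected by the optimizer
       CAST(100.0 * p.row_count / NULLIF(p.estimate_row_count, 0)
            AS decimal(9, 1)) AS pct_of_estimate
FROM sys.dm_exec_query_profiles AS p
WHERE p.session_id = 57
ORDER BY p.node_id;
```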

23 – Policy based management

If you want to automate some management policies, you can use this feature to run continuous or scheduled checks of rules that apply to certain « facets » (instance, database, table…) of SQL Server. For example, you can be informed daily of any newly created database that has not been backed up.

There is no tool in PostGreSQL that facilitates the implementation of management rules for the DBA.

24 – Managing data quality

SQL Server has two distinct modules to manage data quality:

- DQS (Data Quality Services)

- MDS (Master Data Services)

There is nothing to manage data quality in PostGreSQL.

25 – Change Data Capture

Many corporate OLTP databases require certain data to be transferred into an ODS or a data warehouse. To identify the rows that have been inserted, modified or deleted since the previous refresh of the DW or the ODS, you need a reliable tool, so that the target database is fed only with a differential of the data rather than being totally rebuilt…

SQL Server has two tools for this:

- Change tracking

- Change Data Capture
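Both tools are enabled with a few statements. In this sketch the database SalesDb and the table dbo.Orders (with primary key OrderId) are hypothetical; Change Data Capture also needs SQL Server Agent to be running. GO is the batch separator used by SSMS and sqlcmd:

```sql
-- Change tracking: lightweight, tells WHICH rows changed.
ALTER DATABASE SalesDb
SET CHANGE_TRACKING = ON (CHANGE_RETENTION = 2 DAYS, AUTO_CLEANUP = ON);
GO
USE SalesDb;
GO
ALTER TABLE dbo.Orders ENABLE CHANGE_TRACKING;
GO
DECLARE @last_sync bigint = 0;   -- version stored by the previous load
SELECT ct.OrderId, ct.SYS_CHANGE_OPERATION, ct.SYS_CHANGE_VERSION
FROM CHANGETABLE(CHANGES dbo.Orders, @last_sync) AS ct;
SELECT CHANGE_TRACKING_CURRENT_VERSION() AS version_to_store;
GO
-- Change Data Capture: also keeps the changed VALUES, read from the log.
EXEC sys.sp_cdc_enable_db;
EXEC sys.sp_cdc_enable_table
     @source_schema = N'dbo',
     @source_name   = N'Orders',
     @role_name     = NULL;
GO
DECLARE @from binary(10) = sys.fn_cdc_get_min_lsn('dbo_Orders'),
        @to   binary(10) = sys.fn_cdc_get_max_lsn();
SELECT *
FROM cdc.fn_cdc_get_all_changes_dbo_Orders(@from, @to, N'all');
```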

PostGreSQL does not have any similar built-in tool, so you doom yourself to rebuilding your DW or your ODS from scratch!

Of course, you can use third-party software to do it (Kafka, for example).

26 – Transparent Data Encryption

One major feature for dealing with GDPR is Transparent Data Encryption. This feature encrypts the whole database (data files and transaction log and, to avoid any gap in the process, the tempdb database too). So stealing any part of the database, even a backup, won’t do any good… This system has very little performance impact on data processing, because encryption and decryption happen only when physical I/O is needed. To do that, the RDBMS has to manage internally the I/O performed on its files, which PostGreSQL does not.
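Setting it up takes a handful of statements. A minimal sketch, in which the database SalesDb, the certificate name, the file paths and the passwords are all placeholders:

```sql
USE master;
GO
-- Key hierarchy: master key, then a certificate protected by it.
CREATE MASTER KEY ENCRYPTION BY PASSWORD = N'<strong password here>';
CREATE CERTIFICATE TdeCert WITH SUBJECT = N'TDE certificate for SalesDb';

-- Without a copy of the certificate, the backups cannot be restored elsewhere.
BACKUP CERTIFICATE TdeCert
TO FILE = N'D:\Keys\TdeCert.cer'
WITH PRIVATE KEY (FILE = N'D:\Keys\TdeCert.pvk',
                  ENCRYPTION BY PASSWORD = N'<another strong password>');
GO
USE SalesDb;
GO
CREATE DATABASE ENCRYPTION KEY
WITH ALGORITHM = AES_256
ENCRYPTION BY SERVER CERTIFICATE TdeCert;

ALTER DATABASE SalesDb SET ENCRYPTION ON;
GO
-- Follow the background encryption scan (tempdb appears here too).
SELECT DB_NAME(database_id) AS database_name, encryption_state, percent_complete
FROM sys.dm_database_encryption_keys;
```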

PostGreSQL doesn’t have anything like Transparent Data Encryption.

27 – Database/server Audit

There is one option for database auditing in PostgreSQL (pgaudit). But its limitation to the database scope (no full server scope) and the fact that it cannot filter on columns of tables or views, nor on user accounts, produce an astronomical amount of data in which you will spend hours finding what you want.

Also, the database audit can be stopped by a malicious strategy (e.g. filling up the disk where the audit file resides in order to stop the audit process) while PostGreSQL continues to serve data. By contrast, SQL Server can be configured to shut down when the audit can no longer be written…
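On the SQL Server side, the « stop when the audit is unusable » behavior is a simple option of the audit object. A sketch with hypothetical names (audit GdprAudit, database SalesDb, table dbo.Customers) and a hypothetical target folder:

```sql
USE master;
GO
-- Server-level audit; the instance shuts down if audit records cannot be written.
CREATE SERVER AUDIT GdprAudit
TO FILE (FILEPATH = N'E:\Audit\', MAXSIZE = 1 GB, MAX_ROLLOVER_FILES = 20)
WITH (QUEUE_DELAY = 1000, ON_FAILURE = SHUTDOWN);

ALTER SERVER AUDIT GdprAudit WITH (STATE = ON);
GO
USE SalesDb;
GO
-- Audit only what matters: reads and updates on one sensitive table.
CREATE DATABASE AUDIT SPECIFICATION GdprAuditCustomers
FOR SERVER AUDIT GdprAudit
ADD (SELECT, UPDATE ON OBJECT::dbo.Customers BY public)
WITH (STATE = ON);
```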

PostGreSQL’s audit solution has severe limitations that make it difficult to enforce GDPR, in addition to a few security holes.

28 – Resource governor

Resource Governor is a very useful feature when some users are heavy consumers of data while others need to be served quickly. By classifying sessions in SQL, you can define quotas of disk I/O, RAM and CPU for your different categories of users, in order to prioritize processes…
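A minimal sketch of such a setup, in which the pool, the group, the classifier function and the login report_user are hypothetical names and the percentages are arbitrary:

```sql
USE master;
GO
-- Quotas for the heavy consumers: CPU, memory and disk I/O.
CREATE RESOURCE POOL ReportingPool
WITH (MAX_CPU_PERCENT = 30, MAX_MEMORY_PERCENT = 25, MAX_IOPS_PER_VOLUME = 500);

CREATE WORKLOAD GROUP ReportingGroup
WITH (IMPORTANCE = LOW)
USING ReportingPool;
GO
-- Classifier: decides, at login time, which group a session belongs to.
CREATE FUNCTION dbo.fn_rg_classifier()
RETURNS sysname
WITH SCHEMABINDING
AS
BEGIN
    RETURN CASE WHEN SUSER_SNAME() = N'report_user'
                THEN N'ReportingGroup'
                ELSE N'default'
           END;
END;
GO
ALTER RESOURCE GOVERNOR WITH (CLASSIFIER_FUNCTION = dbo.fn_rg_classifier);
ALTER RESOURCE GOVERNOR RECONFIGURE;
```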

There is no way in PostGreSQL to set resource quotas for certain users.

29 – More cache for data than the limit of the physical RAM!

Buffer pool extension: not enough RAM for caching data? Consider adding a second level of cache, 4 to 8 times the size of your actual RAM, with this feature. Of course, it needs a sort of RAM disk, that is, very fast storage like NVMe or Intel Optane…
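Enabling it is a single statement. In this sketch the file path and the size are placeholders to adapt to your own fast storage:

```sql
-- Second-level cache on a fast local drive.
ALTER SERVER CONFIGURATION
SET BUFFER POOL EXTENSION ON
    (FILENAME = N'F:\SSDCACHE\BufferPool.BPE', SIZE = 64 GB);

-- Check the current state of the extension.
SELECT path, state_description, current_size_in_kb
FROM sys.dm_os_buffer_pool_extension_configuration;

-- To turn it off again:
-- ALTER SERVER CONFIGURATION SET BUFFER POOL EXTENSION OFF;
```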

There is no way to create a second level of cache in PostGreSQL.

30 – Investigate inside the DBMS engine and further

The extraordinary collection of Dynamic Management Views (DMVs) helps you collect, understand, tune, fix, analyze… everything you want inside and outside SQL Server in real time. 278 views or inline table functions can be queried to see what SQL Server is doing or has done…

A list of these views can be downloaded in PDF format from the Quest website:

https://www.quest.com/docs/ql-server-2017-dmv-dynamic-management-views-poster-infographic-26735.pdf

278 management views in SQL Server… A big difference compared to the 27 views of the PostGreSQL « Statistics Collector »…
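Two classic examples of what these views answer in one query (a sketch; the TOP values and the 200-character cut are arbitrary choices):

```sql
-- The ten most CPU-hungry statements since the last restart.
SELECT TOP (10)
       qs.total_worker_time / 1000 AS total_cpu_ms,   -- stored in microseconds
       qs.execution_count,
       qs.total_logical_reads,
       SUBSTRING(st.text, 1, 200)  AS query_text_start
FROM sys.dm_exec_query_stats AS qs
CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) AS st
ORDER BY qs.total_worker_time DESC;

-- What the instance spends its time waiting for.
SELECT TOP (10) wait_type, wait_time_ms, waiting_tasks_count
FROM sys.dm_os_wait_stats
ORDER BY wait_time_ms DESC;
```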

31 – Extended Events

Extended Events is a lightweight architecture that makes it possible to investigate the system at a fine-grained level, to understand what happens while SQL Server is running.
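As an example, the session below captures every statement that runs for more than one second. It is only a sketch: the session name and the target file path are hypothetical, and the threshold is arbitrary:

```sql
CREATE EVENT SESSION LongStatements ON SERVER
ADD EVENT sqlserver.sql_statement_completed (
    ACTION (sqlserver.sql_text, sqlserver.client_app_name, sqlserver.username)
    WHERE duration > 1000000            -- microseconds, i.e. more than 1 second
)
ADD TARGET package0.event_file (
    SET filename = N'E:\XE\LongStatements.xel', max_file_size = 50   -- MB
)
WITH (STARTUP_STATE = OFF);

ALTER EVENT SESSION LongStatements ON SERVER STATE = START;

-- Later: read what was captured, one XML document per event.
SELECT CAST(event_data AS xml) AS event_xml
FROM sys.fn_xe_file_target_read_file(N'E:\XE\LongStatements*.xel', NULL, NULL, NULL);

ALTER EVENT SESSION LongStatements ON SERVER STATE = STOP;
```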

No such fine-grained investigation is possible in PostGreSQL. Some contrib modules can do a few things, but they are disparate, and synthesizing the metrics of the different modules is not an easy task since the formats of the collected data are heterogeneous… Of course, the sharpness of the investigations allowed by SQL Server Extended Events has nothing in common with the roughness of the analyses possible in PostGreSQL.

There is absolutely nothing equivalent to Extended Events in PostGreSQL for in-depth troubleshooting.

32 – Database file security

While the SQL Server database engine is running, there is no way to copy or delete the files of a database, because they are locked by the SQL Server process and protected by Windows (note that this protection is not available on Linux, which behaves differently). In PostGreSQL, which creates a great many files for a single database, destroying a data file or an index file is easy, and nothing alerts the DBA apart from some events written to the error log! But by then it is too late…

33 – Easy tuning

SQL Server has always claimed to tune itself automatically. So there are few parameters to modify, essentially at installation time, to deal with RAM and parallelism. 85 options are configurable, but some of them are obsolete (kept for backward compatibility) and others are automated (nothing to do).

When you modify several parameters at once, SQL Server verifies their consistency, unless you run RECONFIGURE with the WITH OVERRIDE option. In fact only 20 options are really used, and in 99% of instances only 5 options are configured.
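The handful of settings usually touched after installation could look like this. The values are arbitrary examples for a hypothetical 64 GB, 16-core server, not recommendations:

```sql
EXEC sys.sp_configure N'show advanced options', 1;
RECONFIGURE;

EXEC sys.sp_configure N'max server memory (MB)', 57344;
EXEC sys.sp_configure N'max degree of parallelism', 8;
EXEC sys.sp_configure N'cost threshold for parallelism', 50;
EXEC sys.sp_configure N'optimize for ad hoc workloads', 1;

-- The new values are validated here;
-- RECONFIGURE WITH OVERRIDE would skip that validation.
RECONFIGURE;

-- Options whose configured value is not yet the running value.
SELECT name, value, value_in_use
FROM sys.configurations
WHERE value <> value_in_use;
```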

PG offers you 315 parameters to deal with, and no verification is made to help you avoid mistakes!

34 – Monitoring tools

As we say in French, « do not shoot the ambulance ». PGAdmin is the worst query tool ever. Even MySQL has a better tool! SQL Server Management Studio is, without a doubt, the best query tool! Some vendors have copied it for their solutions, like Quest with TOAD!

Many heavyweight enterprise monitoring tools exist for SQL Server, like SentryOne SQL, Red Gate, Idera, Apex, Quest Spotlight… or lighter ones like Solar Winds, Paessler, DataDog, DBWatch, SpiceWorks… and sometimes free ones like Kankuru!

Similar monitoring products are rare for PostGreSQL: Solar Winds, Paessler, DataDog… do not operate at the same level as Sentry, Red Gate or Idera…

35 – Hot hardware escalation

SQL Server on Windows supports, with specific hardware designs (HP, Dell), the addition of physical resources (CPU, RAM) without switching off the machine…

PostGreSQL has not been designed to acquire more physical resources without being shut down first.

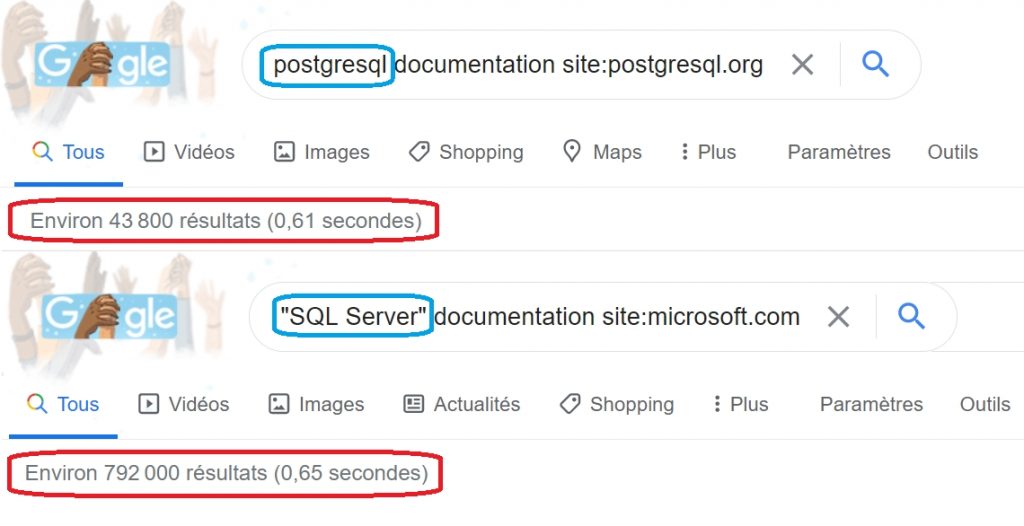

36 – Documentation